Breaking Out of the Box

CSS is about styling boxes. In fact, the whole web is made of boxes, from the browser viewport to elements on a page. But every once in a while a new feature comes along that makes us rethink our design approach.

Round displays, for example, make it fun to play with circular clip areas. Mobile screen notches and virtual keyboards offer challenges to best organize content that stays clear of them. And dual screen or foldable devices make us rethink how to best use available space in a number of different device postures.

These recent evolutions of the web platform made it both more challenging and more interesting to design products. They’re great opportunities for us to break out of our rectangular boxes.

I’d like to talk about a new feature similar to the above: the Window Controls Overlay for Progressive Web Apps (PWAs).

Progressive Web Apps are blurring the lines between apps and websites. They combine the best of both worlds. On one hand, they’re stable, linkable, searchable, and responsive just like websites. On the other hand, they provide additional powerful capabilities, work offline, and read files just like native apps.

As a design surface, PWAs are really interesting because they challenge us to think about what mixing web and device-native user interfaces can be. On desktop devices in particular, we have more than 40 years of history telling us what applications should look like, and it can be hard to break out of this mental model.

At the end of the day though, PWAs on desktop are constrained to the window they appear in: a rectangle with a title bar at the top.

Here’s what a typical desktop PWA app looks like:

Sure, as the author of a PWA, you get to choose the color of the title bar (using the Web Application Manifest theme_color property), but that’s about it.

What if we could think outside this box, and reclaim the real estate of the app’s entire window? Doing so would give us a chance to make our apps more beautiful and feel more integrated in the operating system.

This is exactly what the Window Controls Overlay offers. This new PWA functionality makes it possible to take advantage of the full surface area of the app, including where the title bar normally appears.

About the title bar and window controls

Let’s start with an explanation of what the title bar and window controls are.

The title bar is the area displayed at the top of an app window, which usually contains the app’s name. Window controls are the affordances, or buttons, that make it possible to minimize, maximize, or close the app’s window, and are also displayed at the top.

Window Controls Overlay removes the physical constraint of the title bar and window controls areas. It frees up the full height of the app window, enabling the title bar and window control buttons to be overlaid on top of the application’s web content.

If you are reading this article on a desktop computer, take a quick look at other apps. Chances are they’re already doing something similar to this. In fact, the very web browser you are using to read this uses the top area to display tabs.

Spotify displays album artwork all the way to the top edge of the application window.

Microsoft Word uses the available title bar space to display the auto-save and search functionalities, and more.

The whole point of this feature is to allow you to make use of this space with your own content while providing a way to account for the window control buttons. And it enables you to offer this modified experience on a range of platforms while not adversely affecting the experience on browsers or devices that don’t support Window Controls Overlay. After all, PWAs are all about progressive enhancement, so this feature is a chance to enhance your app to use this extra space when it’s available.

Let’s use the feature

For the rest of this article, we’ll be working on a demo app to learn more about using the feature.

The demo app is called 1DIV. It’s a simple CSS playground where users can create designs using CSS and a single HTML element.

The app has two pages. The first lists the existing CSS designs you’ve created:

The second page enables you to create and edit CSS designs:

Since I’ve added a simple web manifest and service worker, we can install the app as a PWA on desktop. Here is what it looks like on macOS:

And on Windows:

Our app is looking good, but the white title bar in the first page is wasted space. In the second page, it would be really nice if the design area went all the way to the top of the app window.

Let’s use the Window Controls Overlay feature to improve this.

Enabling Window Controls Overlay

The feature is still experimental at the moment. To try it, you need to enable it in one of the supported browsers.

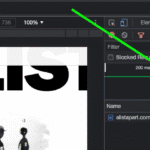

As of now, it has been implemented in Chromium, as a collaboration between Microsoft and Google. We can therefore use it in Chrome or Edge by going to the internal about://flags page, and enabling the Desktop PWA Window Controls Overlay flag.

Using Window Controls Overlay

To use the feature, we need to add the following display_override member to our web app’s manifest file:

{

"name": "1DIV",

"description": "1DIV is a mini CSS playground",

"lang": "en-US",

"start_url": "/",

"theme_color": "#ffffff",

"background_color": "#ffffff",

"display_override": [

"window-controls-overlay"

],

"icons": [

...

]

}

On the surface, the feature is really simple to use. This manifest change is the only thing we need to make the title bar disappear and turn the window controls into an overlay.

However, to provide a great experience for all users regardless of what device or browser they use, and to make the most of the title bar area in our design, we’ll need a bit of CSS and JavaScript code.

Here is what the app looks like now:

The title bar is gone, which is what we wanted, but our logo, search field, and NEW button are partially covered by the window controls because now our layout starts at the top of the window.

It’s similar on Windows, with the difference that the close, maximize, and minimize buttons appear on the right side, grouped together with the PWA control buttons:

Using CSS to keep clear of the window controls

Along with the feature, new CSS environment variables have been introduced:

titlebar-area-xtitlebar-area-ytitlebar-area-widthtitlebar-area-height

You use these variables with the CSS env() function to position your content where the title bar would have been while ensuring it won’t overlap with the window controls. In our case, we’ll use two of the variables to position our header, which contains the logo, search bar, and NEW button.

header {

position: absolute;

left: env(titlebar-area-x, 0);

width: env(titlebar-area-width, 100%);

height: var(--toolbar-height);

}

The titlebar-area-x variable gives us the distance from the left of the viewport to where the title bar would appear, and titlebar-area-width is its width. (Remember, this is not equivalent to the width of the entire viewport, just the title bar portion, which as noted earlier, doesn’t include the window controls.)

By doing this, we make sure our content remains fully visible. We’re also defining fallback values (the second parameter in the env() function) for when the variables are not defined (such as on non-supporting browsers, or when the Windows Control Overlay feature is disabled).

Now our header adapts to its surroundings, and it doesn’t feel like the window control buttons have been added as an afterthought. The app looks a lot more like a native app.

Changing the window controls background color so it blends in

Now let’s take a closer look at our second page: the CSS playground editor.

Not great. Our CSS demo area does go all the way to the top, which is what we wanted, but the way the window controls appear as white rectangles on top of it is quite jarring.

We can fix this by changing the app’s theme color. There are a couple of ways to define it:

- PWAs can define a theme color in the web app manifest file using the theme_color manifest member. This color is then used by the OS in different ways. On desktop platforms, it is used to provide a background color to the title bar and window controls.

- Websites can use the theme-color meta tag as well. It’s used by browsers to customize the color of the UI around the web page. For PWAs, this color can override the manifest

theme_color.

In our case, we can set the manifest theme_color to white to provide the right default color for our app. The OS will read this color value when the app is installed and use it to make the window controls background color white. This color works great for our main page with the list of demos.

The theme-color meta tag can be changed at runtime, using JavaScript. So we can do that to override the white with the right demo background color when one is opened.

Here is the function we’ll use:

function themeWindow(bgColor) {

document.querySelector("meta[name=theme-color]").setAttribute('content', bgColor);

}With this in place, we can imagine how using color and CSS transitions can produce a smooth change from the list page to the demo page, and enable the window control buttons to blend in with the rest of the app’s interface.

Dragging the window

Now, getting rid of the title bar entirely does have an important accessibility consequence: it’s much more difficult to move the application window around.

The title bar provides a sizable area for users to click and drag, but by using the Window Controls Overlay feature, this area becomes limited to where the control buttons are, and users have to very precisely aim between these buttons to move the window.

Fortunately, this can be fixed using CSS with the app-region property. This property is, for now, only supported in Chromium-based browsers and needs the -webkit- vendor prefix.

To make any element of the app become a dragging target for the window, we can use the following:

-webkit-app-region: drag;

It is also possible to explicitly make an element non-draggable:

-webkit-app-region: no-drag;

These options can be useful for us. We can make the entire header a dragging target, but make the search field and NEW button within it non-draggable so they can still be used as normal.

However, because the editor page doesn’t display the header, users wouldn’t be able to drag the window while editing code. So let’s use a different approach. We’ll create another element before our header, also absolutely positioned, and dedicated to dragging the window.

... .drag {

position: absolute;

top: 0;

width: 100%;

height: env(titlebar-area-height, 0);

-webkit-app-region: drag;

}With the above code, we’re making the draggable area span the entire viewport width, and using the titlebar-area-height variable to make it as tall as what the title bar would have been. This way, our draggable area is aligned with the window control buttons as shown below.

And, now, to make sure our search field and button remain usable:

header .search,

header .new {

-webkit-app-region: no-drag;

}With the above code, users can click and drag where the title bar used to be. It is an area that users expect to be able to use to move windows on desktop, and we’re not breaking this expectation, which is good.

Adapting to window resize

It may be useful for an app to know both whether the window controls overlay is visible and when its size changes. In our case, if the user made the window very narrow, there wouldn’t be enough space for the search field, logo, and button to fit, so we’d want to push them down a bit.

The Window Controls Overlay feature comes with a JavaScript API we can use to do this: navigator.windowControlsOverlay.

The API provides three interesting things:

navigator.windowControlsOverlay.visiblelets us know whether the overlay is visible.navigator.windowControlsOverlay.getBoundingClientRect()lets us know the position and size of the title bar area.navigator.windowControlsOverlay.ongeometrychangelets us know when the size or visibility changes.

Let’s use this to be aware of the size of the title bar area and move the header down if it’s too narrow.

if (navigator.windowControlsOverlay) {

navigator.windowControlsOverlay.addEventListener('geometrychange', () => {

const { width } = navigator.windowControlsOverlay.getBoundingClientRect();

document.body.classList.toggle('narrow', width < 250);

});

}In the example above, we set the narrow class on the body of the app if the title bar area is narrower than 250px. We could do something similar with a media query, but using the windowControlsOverlay API has two advantages for our use case:

- It’s only fired when the feature is supported and used; we don’t want to adapt the design otherwise.

- We get the size of the title bar area across operating systems, which is great because the size of the window controls is different on Mac and Windows. Using a media query wouldn’t make it possible for us to know exactly how much space remains.

.narrow header {

top: env(titlebar-area-height, 0);

left: 0;

width: 100%;

}Using the above CSS code, we can move our header down to stay clear of the window control buttons when the window is too narrow, and move the thumbnails down accordingly.

Thirty pixels of exciting design opportunities

Using the Window Controls Overlay feature, we were able to take our simple demo app and turn it into something that feels so much more integrated on desktop devices. Something that reaches out of the usual window constraints and provides a custom experience for its users.

In reality, this feature only gives us about 30 pixels of extra room and comes with challenges on how to deal with the window controls. And yet, this extra room and those challenges can be turned into exciting design opportunities.

More devices of all shapes and forms get invented all the time, and the web keeps on evolving to adapt to them. New features get added to the web platform to allow us, web authors, to integrate more and more deeply with those devices. From watches or foldable devices to desktop computers, we need to evolve our design approach for the web. Building for the web now lets us think outside the rectangular box.

So let’s embrace this. Let’s use the standard technologies already at our disposal, and experiment with new ideas to provide tailored experiences for all devices, all from a single codebase!

If you get a chance to try the Window Controls Overlay feature and have feedback about it, you can open issues on the spec’s repository. It’s still early in the development of this feature, and you can help make it even better. Or, you can take a look at the feature’s existing documentation, or this demo app and its source code.

Designers, (Re)define Success First

About two and a half years ago, I introduced the idea of daily ethical design. It was born out of my frustration with the many obstacles to achieving design that’s usable and equitable; protects people’s privacy, agency, and focus; benefits society; and restores nature. I argued that we need to overcome the inconveniences that prevent us from acting ethically and that we need to elevate design ethics to a more practical level by structurally integrating it into our daily work, processes, and tools.

Unfortunately, we’re still very far from this ideal.

At the time, I didn’t know yet how to structurally integrate ethics. Yes, I had found some tools that had worked for me in previous projects, such as using checklists, assumption tracking, and “dark reality” sessions, but I didn’t manage to apply those in every project. I was still struggling for time and support, and at best I had only partially achieved a higher (moral) quality of design—which is far from my definition of structurally integrated.

I decided to dig deeper for the root causes in business that prevent us from practicing daily ethical design. Now, after much research and experimentation, I believe that I’ve found the key that will let us structurally integrate ethics. And it’s surprisingly simple! But first we need to zoom out to get a better understanding of what we’re up against.

Influence the system

Sadly, we’re trapped in a capitalistic system that reinforces consumerism and inequality, and it’s obsessed with the fantasy of endless growth. Sea levels, temperatures, and our demand for energy continue to rise unchallenged, while the gap between rich and poor continues to widen. Shareholders expect ever-higher returns on their investments, and companies feel forced to set short-term objectives that reflect this. Over the last decades, those objectives have twisted our well-intended human-centered mindset into a powerful machine that promotes ever-higher levels of consumption. When we’re working for an organization that pursues “double-digit growth” or “aggressive sales targets” (which is 99 percent of us), that’s very hard to resist while remaining human friendly. Even with our best intentions, and even though we like to say that we create solutions for people, we’re a part of the problem.

What can we do to change this?

We can start by acting on the right level of the system. Donella H. Meadows, a system thinker, once listed ways to influence a system in order of effectiveness. When you apply these to design, you get:

- At the lowest level of effectiveness, you can affect numbers such as usability scores or the number of design critiques. But none of that will change the direction of a company.

- Similarly, affecting buffers (such as team budgets), stocks (such as the number of designers), flows (such as the number of new hires), and delays (such as the time that it takes to hear about the effect of design) won’t significantly affect a company.

- Focusing instead on feedback loops such as management control, employee recognition, or design-system investments can help a company become better at achieving its objectives. But that doesn’t change the objectives themselves, which means that the organization will still work against your ethical-design ideals.

- The next level, information flows, is what most ethical-design initiatives focus on now: the exchange of ethical methods, toolkits, articles, conferences, workshops, and so on. This is also where ethical design has remained mostly theoretical. We’ve been focusing on the wrong level of the system all this time.

- Take rules, for example—they beat knowledge every time. There can be widely accepted rules, such as how finance works, or a scrum team’s definition of done. But ethical design can also be smothered by unofficial rules meant to maintain profits, often revealed through comments such as “the client didn’t ask for it” or “don’t make it too big.”

- Changing the rules without holding official power is very hard. That’s why the next level is so influential: self-organization. Experimentation, bottom-up initiatives, passion projects, self-steering teams—all of these are examples of self-organization that improve the resilience and creativity of a company. It’s exactly this diversity of viewpoints that’s needed to structurally tackle big systemic issues like consumerism, wealth inequality, and climate change.

- Yet even stronger than self-organization are objectives and metrics. Our companies want to make more money, which means that everything and everyone in the company does their best to… make the company more money. And once I realized that profit is nothing more than a measurement, I understood how crucial a very specific, defined metric can be toward pushing a company in a certain direction.

The takeaway? If we truly want to incorporate ethics into our daily design practice, we must first change the measurable objectives of the company we work for, from the bottom up.

Redefine success

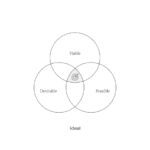

Traditionally, we consider a product or service successful if it’s desirable to humans, technologically feasible, and financially viable. You tend to see these represented as equals; if you type the three words in a search engine, you’ll find diagrams of three equally sized, evenly arranged circles.

But in our hearts, we all know that the three dimensions aren’t equally weighted: it’s viability that ultimately controls whether a product will go live. So a more realistic representation might look like this:

Desirability and feasibility are the means; viability is the goal. Companies—outside of nonprofits and charities—exist to make money.

A genuinely purpose-driven company would try to reverse this dynamic: it would recognize finance for what it was intended for: a means. So both feasibility and viability are means to achieve what the company set out to achieve. It makes intuitive sense: to achieve most anything, you need resources, people, and money. (Fun fact: the Italian language knows no difference between feasibility and viability; both are simply fattibilità.)

But simply swapping viable for desirable isn’t enough to achieve an ethical outcome. Desirability is still linked to consumerism because the associated activities aim to identify what people want—whether it’s good for them or not. Desirability objectives, such as user satisfaction or conversion, don’t consider whether a product is healthy for people. They don’t prevent us from creating products that distract or manipulate people or stop us from contributing to society’s wealth inequality. They’re unsuitable for establishing a healthy balance with nature.

There’s a fourth dimension of success that’s missing: our designs also need to be ethical in the effect that they have on the world.

This is hardly a new idea. Many similar models exist, some calling the fourth dimension accountability, integrity, or responsibility. What I’ve never seen before, however, is the necessary step that comes after: to influence the system as designers and to make ethical design more practical, we must create objectives for ethical design that are achievable and inspirational. There’s no one way to do this because it highly depends on your culture, values, and industry. But I’ll give you the version that I developed with a group of colleagues at a design agency. Consider it a template to get started.

Pursue well-being, equity, and sustainability

We created objectives that address design’s effect on three levels: individual, societal, and global.

An objective on the individual level tells us what success is beyond the typical focus of usability and satisfaction—instead considering matters such as how much time and attention is required from users. We pursued well-being:

We create products and services that allow for people’s health and happiness. Our solutions are calm, transparent, nonaddictive, and nonmisleading. We respect our users’ time, attention, and privacy, and help them make healthy and respectful choices.

An objective on the societal level forces us to consider our impact beyond just the user, widening our attention to the economy, communities, and other indirect stakeholders. We called this objective equity:

We create products and services that have a positive social impact. We consider economic equality, racial justice, and the inclusivity and diversity of people as teams, users, and customer segments. We listen to local culture, communities, and those we affect.

Finally, the objective on the global level aims to ensure that we remain in balance with the only home we have as humanity. Referring to it simply as sustainability, our definition was:

We create products and services that reward sufficiency and reusability. Our solutions support the circular economy: we create value from waste, repurpose products, and prioritize sustainable choices. We deliver functionality instead of ownership, and we limit energy use.

In short, ethical design (to us) meant achieving wellbeing for each user and an equitable value distribution within society through a design that can be sustained by our living planet. When we introduced these objectives in the company, for many colleagues, design ethics and responsible design suddenly became tangible and achievable through practical—and even familiar—actions.

Measure impact

But defining these objectives still isn’t enough. What truly caught the attention of senior management was the fact that we created a way to measure every design project’s well-being, equity, and sustainability.

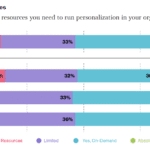

This overview lists example metrics that you can use as you pursue well-being, equity, and sustainability:

There’s a lot of power in measurement. As the saying goes, what gets measured gets done. Donella Meadows once shared this example:

“If the desired system state is national security, and that is defined as the amount of money spent on the military, the system will produce military spending. It may or may not produce national security.”

This phenomenon explains why desirability is a poor indicator of success: it’s typically defined as the increase in customer satisfaction, session length, frequency of use, conversion rate, churn rate, download rate, and so on. But none of these metrics increase the health of people, communities, or ecosystems. What if instead we measured success through metrics for (digital) well-being, such as (reduced) screen time or software energy consumption?

There’s another important message here. Even if we set an objective to build a calm interface, if we were to choose the wrong metric for calmness—say, the number of interface elements—we could still end up with a screen that induces anxiety. Choosing the wrong metric can completely undo good intentions.

Additionally, choosing the right metric is enormously helpful in focusing the design team. Once you go through the exercise of choosing metrics for our objectives, you’re forced to consider what success looks like concretely and how you can prove that you’ve reached your ethical objectives. It also forces you to consider what we as designers have control over: what can I include in my design or change in my process that will lead to the right type of success? The answer to this question brings a lot of clarity and focus.

And finally, it’s good to remember that traditional businesses run on measurements, and managers love to spend much time discussing charts (ideally hockey-stick shaped)—especially if they concern profit, the one-above-all of metrics. For good or ill, to improve the system, to have a serious discussion about ethical design with managers, we’ll need to speak that business language.

Practice daily ethical design

Once you’ve defined your objectives and you have a reasonable idea of the potential metrics for your design project, only then do you have a chance to structurally practice ethical design. It “simply” becomes a matter of using your creativity and choosing from all the knowledge and toolkits already available to you.

I think this is quite exciting! It opens a whole new set of challenges and considerations for the design process. Should you go with that energy-consuming video or would a simple illustration be enough? Which typeface is the most calm and inclusive? Which new tools and methods do you use? When is the website’s end of life? How can you provide the same service while requiring less attention from users? How do you make sure that those who are affected by decisions are there when those decisions are made? How can you measure our effects?

The redefinition of success will completely change what it means to do good design.

There is, however, a final piece of the puzzle that’s missing: convincing your client, product owner, or manager to be mindful of well-being, equity, and sustainability. For this, it’s essential to engage stakeholders in a dedicated kickoff session.

Kick it off or fall back to status quo

The kickoff is the most important meeting that can be so easy to forget to include. It consists of two major phases: 1) the alignment of expectations, and 2) the definition of success.

In the first phase, the entire (design) team goes over the project brief and meets with all the relevant stakeholders. Everyone gets to know one another and express their expectations on the outcome and their contributions to achieving it. Assumptions are raised and discussed. The aim is to get on the same level of understanding and to in turn avoid preventable miscommunications and surprises later in the project.

For example, for a recent freelance project that aimed to design a digital platform that facilitates US student advisors’ documentation and communication, we conducted an online kickoff with the client, a subject-matter expert, and two other designers. We used a combination of canvases on Miro: one with questions from “Manual of Me” (to get to know each other), a Team Canvas (to express expectations), and a version of the Project Canvas to align on scope, timeline, and other practical matters.

The above is the traditional purpose of a kickoff. But just as important as expressing expectations is agreeing on what success means for the project—in terms of desirability, viability, feasibility, and ethics. What are the objectives in each dimension?

Agreement on what success means at such an early stage is crucial because you can rely on it for the remainder of the project. If, for example, the design team wants to build an inclusive app for a diverse user group, they can raise diversity as a specific success criterion during the kickoff. If the client agrees, the team can refer back to that promise throughout the project. “As we agreed in our first meeting, having a diverse user group that includes A and B is necessary to build a successful product. So we do activity X and follow research process Y.” Compare those odds to a situation in which the team didn’t agree to that beforehand and had to ask for permission halfway through the project. The client might argue that that came on top of the agreed scope—and she’d be right.

In the case of this freelance project, to define success I prepared a round canvas that I call the Wheel of Success. It consists of an inner ring, meant to capture ideas for objectives, and a set of outer rings, meant to capture ideas on how to measure those objectives. The rings are divided into five dimensions of successful design: healthy, equitable, sustainable, desirable, feasible, and viable.

We went through each dimension, writing down ideas on digital sticky notes. Then we discussed our ideas and verbally agreed on the most important ones. For example, our client agreed that sustainability and progressive enhancement are important success criteria for the platform. And the subject-matter expert emphasized the importance of including students from low-income and disadvantaged groups in the design process.

After the kickoff, we summarized our ideas and shared understanding in a project brief that captured these aspects:

- the project’s origin and purpose: why are we doing this project?

- the problem definition: what do we want to solve?

- the concrete goals and metrics for each success dimension: what do we want to achieve?

- the scope, process, and role descriptions: how will we achieve it?

With such a brief in place, you can use the agreed-upon objectives and concrete metrics as a checklist of success, and your design team will be ready to pursue the right objective—using the tools, methods, and metrics at their disposal to achieve ethical outcomes.

Conclusion

Over the past year, quite a few colleagues have asked me, “Where do I start with ethical design?” My answer has always been the same: organize a session with your stakeholders to (re)define success. Even though you might not always be 100 percent successful in agreeing on goals that cover all responsibility objectives, that beats the alternative (the status quo) every time. If you want to be an ethical, responsible designer, there’s no skipping this step.

To be even more specific: if you consider yourself a strategic designer, your challenge is to define ethical objectives, set the right metrics, and conduct those kick-off sessions. If you consider yourself a system designer, your starting point is to understand how your industry contributes to consumerism and inequality, understand how finance drives business, and brainstorm which levers are available to influence the system on the highest level. Then redefine success to create the space to exercise those levers.

And for those who consider themselves service designers or UX designers or UI designers: if you truly want to have a positive, meaningful impact, stay away from the toolkits and meetups and conferences for a while. Instead, gather your colleagues and define goals for well-being, equity, and sustainability through design. Engage your stakeholders in a workshop and challenge them to think of ways to achieve and measure those ethical goals. Take their input, make it concrete and visible, ask for their agreement, and hold them to it.

Otherwise, I’m genuinely sorry to say, you’re wasting your precious time and creative energy.

Of course, engaging your stakeholders in this way can be uncomfortable. Many of my colleagues expressed doubts such as “What will the client think of this?,” “Will they take me seriously?,” and “Can’t we just do it within the design team instead?” In fact, a product manager once asked me why ethics couldn’t just be a structured part of the design process—to just do it without spending the effort to define ethical objectives. It’s a tempting idea, right? We wouldn’t have to have difficult discussions with stakeholders about what values or which key-performance indicators to pursue. It would let us focus on what we like and do best: designing.

But as systems theory tells us, that’s not enough. For those of us who aren’t from marginalized groups and have the privilege to be able to speak up and be heard, that uncomfortable space is exactly where we need to be if we truly want to make a difference. We can’t remain within the design-for-designers bubble, enjoying our privileged working-from-home situation, disconnected from the real world out there. For those of us who have the possibility to speak up and be heard: if we solely keep talking about ethical design and it remains at the level of articles and toolkits—we’re not designing ethically. It’s just theory. We need to actively engage our colleagues and clients by challenging them to redefine success in business.

With a bit of courage, determination, and focus, we can break out of this cage that finance and business-as-usual have built around us and become facilitators of a new type of business that can see beyond financial value. We just need to agree on the right objectives at the start of each design project, find the right metrics, and realize that we already have everything that we need to get started. That’s what it means to do daily ethical design.

For their inspiration and support over the years, I would like to thank Emanuela Cozzi Schettini, José Gallegos, Annegret Bönemann, Ian Dorr, Vera Rademaker, Virginia Rispoli, Cecilia Scolaro, Rouzbeh Amini, and many others.

Mobile-First CSS: Is It Time for a Rethink?

The mobile-first design methodology is great—it focuses on what really matters to the user, it’s well-practiced, and it’s been a common design pattern for years. So developing your CSS mobile-first should also be great, too…right?

Well, not necessarily. Classic mobile-first CSS development is based on the principle of overwriting style declarations: you begin your CSS with default style declarations, and overwrite and/or add new styles as you add breakpoints with min-width media queries for larger viewports (for a good overview see “What is Mobile First CSS and Why Does It Rock?”). But all those exceptions create complexity and inefficiency, which in turn can lead to an increased testing effort and a code base that’s harder to maintain. Admit it—how many of us willingly want that?

On your own projects, mobile-first CSS may yet be the best tool for the job, but first you need to evaluate just how appropriate it is in light of the visual design and user interactions you’re working on. To help you get started, here’s how I go about tackling the factors you need to watch for, and I’ll discuss some alternate solutions if mobile-first doesn’t seem to suit your project.

Advantages of mobile-first

Some of the things to like with mobile-first CSS development—and why it’s been the de facto development methodology for so long—make a lot of sense:

Development hierarchy. One thing you undoubtedly get from mobile-first is a nice development hierarchy—you just focus on the mobile view and get developing.

Tried and tested. It’s a tried and tested methodology that’s worked for years for a reason: it solves a problem really well.

Prioritizes the mobile view. The mobile view is the simplest and arguably the most important, as it encompasses all the key user journeys, and often accounts for a higher proportion of user visits (depending on the project).

Prevents desktop-centric development. As development is done using desktop computers, it can be tempting to initially focus on the desktop view. But thinking about mobile from the start prevents us from getting stuck later on; no one wants to spend their time retrofitting a desktop-centric site to work on mobile devices!

Disadvantages of mobile-first

Setting style declarations and then overwriting them at higher breakpoints can lead to undesirable ramifications:

More complexity. The farther up the breakpoint hierarchy you go, the more unnecessary code you inherit from lower breakpoints.

Higher CSS specificity. Styles that have been reverted to their browser default value in a class name declaration now have a higher specificity. This can be a headache on large projects when you want to keep the CSS selectors as simple as possible.

Requires more regression testing. Changes to the CSS at a lower view (like adding a new style) requires all higher breakpoints to be regression tested.

The browser can’t prioritize CSS downloads. At wider breakpoints, classic mobile-first min-width media queries don’t leverage the browser’s capability to download CSS files in priority order.

The problem of property value overrides

There is nothing inherently wrong with overwriting values; CSS was designed to do just that. Still, inheriting incorrect values is unhelpful and can be burdensome and inefficient. It can also lead to increased style specificity when you have to overwrite styles to reset them back to their defaults, something that may cause issues later on, especially if you are using a combination of bespoke CSS and utility classes. We won’t be able to use a utility class for a style that has been reset with a higher specificity.

With this in mind, I’m developing CSS with a focus on the default values much more these days. Since there’s no specific order, and no chains of specific values to keep track of, this frees me to develop breakpoints simultaneously. I concentrate on finding common styles and isolating the specific exceptions in closed media query ranges (that is, any range with a max-width set).

This approach opens up some opportunities, as you can look at each breakpoint as a clean slate. If a component’s layout looks like it should be based on Flexbox at all breakpoints, it’s fine and can be coded in the default style sheet. But if it looks like Grid would be much better for large screens and Flexbox for mobile, these can both be done entirely independently when the CSS is put into closed media query ranges. Also, developing simultaneously requires you to have a good understanding of any given component in all breakpoints up front. This can help surface issues in the design earlier in the development process. We don’t want to get stuck down a rabbit hole building a complex component for mobile, and then get the designs for desktop and find they are equally complex and incompatible with the HTML we created for the mobile view!

Though this approach isn’t going to suit everyone, I encourage you to give it a try. There are plenty of tools out there to help with concurrent development, such as Responsively App, Blisk, and many others.

Having said that, I don’t feel the order itself is particularly relevant. If you are comfortable with focusing on the mobile view, have a good understanding of the requirements for other breakpoints, and prefer to work on one device at a time, then by all means stick with the classic development order. The important thing is to identify common styles and exceptions so you can put them in the relevant stylesheet—a sort of manual tree-shaking process! Personally, I find this a little easier when working on a component across breakpoints, but that’s by no means a requirement.

Closed media query ranges in practice

In classic mobile-first CSS we overwrite the styles, but we can avoid this by using media query ranges. To illustrate the difference (I’m using SCSS for brevity), let’s assume there are three visual designs:

- smaller than 768

- from 768 to below 1024

- 1024 and anything larger

Take a simple example where a block-level element has a default padding of “20px,” which is overwritten at tablet to be “40px” and set back to “20px” on desktop.

Classic | Closed media query range |

The subtle difference is that the mobile-first example sets the default padding to “20px” and then overwrites it at each breakpoint, setting it three times in total. In contrast, the second example sets the default padding to “20px” and only overrides it at the relevant breakpoint where it isn’t the default value (in this instance, tablet is the exception).

The goal is to:

- Only set styles when needed.

- Not set them with the expectation of overwriting them later on, again and again.

To this end, closed media query ranges are our best friend. If we need to make a change to any given view, we make it in the CSS media query range that applies to the specific breakpoint. We’ll be much less likely to introduce unwanted alterations, and our regression testing only needs to focus on the breakpoint we have actually edited.

Taking the above example, if we find that .my-block spacing on desktop is already accounted for by the margin at that breakpoint, and since we want to remove the padding altogether, we could do this by setting the mobile padding in a closed media query range.

.my-block {

@media (max-width: 767.98px) {

padding: 20px;

}

@media (min-width: 768px) and (max-width: 1023.98px) {

padding: 40px;

}

}The browser default padding for our block is “0,” so instead of adding a desktop media query and using unset or “0” for the padding value (which we would need with mobile-first), we can wrap the mobile padding in a closed media query (since it is now also an exception) so it won’t get picked up at wider breakpoints. At the desktop breakpoint, we won’t need to set any padding style, as we want the browser default value.

Bundling versus separating the CSS

Back in the day, keeping the number of requests to a minimum was very important due to the browser’s limit of concurrent requests (typically around six). As a consequence, the use of image sprites and CSS bundling was the norm, with all the CSS being downloaded in one go, as one stylesheet with highest priority.

With HTTP/2 and HTTP/3 now on the scene, the number of requests is no longer the big deal it used to be. This allows us to separate the CSS into multiple files by media query. The clear benefit of this is the browser can now request the CSS it currently needs with a higher priority than the CSS it doesn’t. This is more performant and can reduce the overall time page rendering is blocked.

Which HTTP version are you using?

To determine which version of HTTP you’re using, go to your website and open your browser’s dev tools. Next, select the Network tab and make sure the Protocol column is visible. If “h2” is listed under Protocol, it means HTTP/2 is being used.

Note: to view the Protocol in your browser’s dev tools, go to the Network tab, reload your page, right-click any column header (e.g., Name), and check the Protocol column.

Also, if your site is still using HTTP/1...WHY?!! What are you waiting for? There is excellent user support for HTTP/2.

Splitting the CSS

Separating the CSS into individual files is a worthwhile task. Linking the separate CSS files using the relevant media attribute allows the browser to identify which files are needed immediately (because they’re render-blocking) and which can be deferred. Based on this, it allocates each file an appropriate priority.

In the following example of a website visited on a mobile breakpoint, we can see the mobile and default CSS are loaded with “Highest” priority, as they are currently needed to render the page. The remaining CSS files (print, tablet, and desktop) are still downloaded in case they’ll be needed later, but with “Lowest” priority.

With bundled CSS, the browser will have to download the CSS file and parse it before rendering can start.

While, as noted, with the CSS separated into different files linked and marked up with the relevant media attribute, the browser can prioritize the files it currently needs. Using closed media query ranges allows the browser to do this at all widths, as opposed to classic mobile-first min-width queries, where the desktop browser would have to download all the CSS with Highest priority. We can’t assume that desktop users always have a fast connection. For instance, in many rural areas, internet connection speeds are still slow.

The media queries and number of separate CSS files will vary from project to project based on project requirements, but might look similar to the example below.

Bundled CSS This single file contains all the CSS, including all media queries, and it will be downloaded with Highest priority. | Separated CSS Separating the CSS and specifying a |

Depending on the project’s deployment strategy, a change to one file (mobile.css, for example) would only require the QA team to regression test on devices in that specific media query range. Compare that to the prospect of deploying the single bundled site.css file, an approach that would normally trigger a full regression test.

Moving on

The uptake of mobile-first CSS was a really important milestone in web development; it has helped front-end developers focus on mobile web applications, rather than developing sites on desktop and then attempting to retrofit them to work on other devices.

I don’t think anyone wants to return to that development model again, but it’s important we don’t lose sight of the issue it highlighted: that things can easily get convoluted and less efficient if we prioritize one particular device—any device—over others. For this reason, focusing on the CSS in its own right, always mindful of what is the default setting and what’s an exception, seems like the natural next step. I’ve started noticing small simplifications in my own CSS, as well as other developers’, and that testing and maintenance work is also a bit more simplified and productive.

In general, simplifying CSS rule creation whenever we can is ultimately a cleaner approach than going around in circles of overrides. But whichever methodology you choose, it needs to suit the project. Mobile-first may—or may not—turn out to be the best choice for what’s involved, but first you need to solidly understand the trade-offs you’re stepping into.

Personalization Pyramid: A Framework for Designing with User Data

As a UX professional in today’s data-driven landscape, it’s increasingly likely that you’ve been asked to design a personalized digital experience, whether it’s a public website, user portal, or native application. Yet while there continues to be no shortage of marketing hype around personalization platforms, we still have very few standardized approaches for implementing personalized UX.

That’s where we come in. After completing dozens of personalization projects over the past few years, we gave ourselves a goal: could you create a holistic personalization framework specifically for UX practitioners? The Personalization Pyramid is a designer-centric model for standing up human-centered personalization programs, spanning data, segmentation, content delivery, and overall goals. By using this approach, you will be able to understand the core components of a contemporary, UX-driven personalization program (or at the very least know enough to get started).

Getting Started

For the sake of this article, we’ll assume you’re already familiar with the basics of digital personalization. A good overview can be found here: Website Personalization Planning. While UX projects in this area can take on many different forms, they often stem from similar starting points.

Common scenarios for starting a personalization project:

- Your organization or client purchased a content management system (CMS) or marketing automation platform (MAP) or related technology that supports personalization

- The CMO, CDO, or CIO has identified personalization as a goal

- Customer data is disjointed or ambiguous

- You are running some isolated targeting campaigns or A/B testing

- Stakeholders disagree on personalization approach

- Mandate of customer privacy rules (e.g. GDPR) requires revisiting existing user targeting practices

Regardless of where you begin, a successful personalization program will require the same core building blocks. We’ve captured these as the “levels” on the pyramid. Whether you are a UX designer, researcher, or strategist, understanding the core components can help make your contribution successful.

From top to bottom, the levels include:

- North Star: What larger strategic objective is driving the personalization program?

- Goals: What are the specific, measurable outcomes of the program?

- Touchpoints: Where will the personalized experience be served?

- Contexts and Campaigns: What personalization content will the user see?

- User Segments: What constitutes a unique, usable audience?

- Actionable Data: What reliable and authoritative data is captured by our technical platform to drive personalization?

- Raw Data: What wider set of data is conceivably available (already in our setting) allowing you to personalize?

We’ll go through each of these levels in turn. To help make this actionable, we created an accompanying deck of cards to illustrate specific examples from each level. We’ve found them helpful in personalization brainstorming sessions, and will include examples for you here.

Starting at the Top

The components of the pyramid are as follows:

North Star

A north star is what you are aiming for overall with your personalization program (big or small). The North Star defines the (one) overall mission of the personalization program. What do you wish to accomplish? North Stars cast a shadow. The bigger the star, the bigger the shadow. Example of North Starts might include:

- Function: Personalize based on basic user inputs. Examples: “Raw” notifications, basic search results, system user settings and configuration options, general customization, basic optimizations

- Feature: Self-contained personalization componentry. Examples: “Cooked” notifications, advanced optimizations (geolocation), basic dynamic messaging, customized modules, automations, recommenders

- Experience: Personalized user experiences across multiple interactions and user flows. Examples: Email campaigns, landing pages, advanced messaging (i.e. C2C chat) or conversational interfaces, larger user flows and content-intensive optimizations (localization).

- Product: Highly differentiating personalized product experiences. Examples: Standalone, branded experiences with personalization at their core, like the “algotorial” playlists by Spotify such as Discover Weekly.

Goals

As in any good UX design, personalization can help accelerate designing with customer intentions. Goals are the tactical and measurable metrics that will prove the overall program is successful. A good place to start is with your current analytics and measurement program and metrics you can benchmark against. In some cases, new goals may be appropriate. The key thing to remember is that personalization itself is not a goal, rather it is a means to an end. Common goals include:

- Conversion

- Time on task

- Net promoter score (NPS)

- Customer satisfaction

Touchpoints

Touchpoints are where the personalization happens. As a UX designer, this will be one of your largest areas of responsibility. The touchpoints available to you will depend on how your personalization and associated technology capabilities are instrumented, and should be rooted in improving a user’s experience at a particular point in the journey. Touchpoints can be multi-device (mobile, in-store, website) but also more granular (web banner, web pop-up etc.). Here are some examples:

Channel-level Touchpoints

- Email: Role

- Email: Time of open

- In-store display (JSON endpoint)

- Native app

- Search

Wireframe-level Touchpoints

- Web overlay

- Web alert bar

- Web banner

- Web content block

- Web menu

If you’re designing for web interfaces, for example, you will likely need to include personalized “zones” in your wireframes. The content for these can be presented programmatically in touchpoints based on our next step, contexts and campaigns.

Source: “Essential Guide to End-to-End Personaliztion” by Kibo.

Contexts and Campaigns

Once you’ve outlined some touchpoints, you can consider the actual personalized content a user will receive. Many personalization tools will refer to these as “campaigns” (so, for example, a campaign on a web banner for new visitors to the website). These will programmatically be shown at certain touchpoints to certain user segments, as defined by user data. At this stage, we find it helpful to consider two separate models: a context model and a content model. The context helps you consider the level of engagement of the user at the personalization moment, for example a user casually browsing information vs. doing a deep-dive. Think of it in terms of information retrieval behaviors. The content model can then help you determine what type of personalization to serve based on the context (for example, an “Enrich” campaign that shows related articles may be a suitable supplement to extant content).

Personalization Context Model:

- Browse

- Skim

- Nudge

- Feast

Personalization Content Model:

- Alert

- Make Easier

- Cross-Sell

- Enrich

We’ve written extensively about each of these models elsewhere, so if you’d like to read more you can check out Colin’s Personalization Content Model and Jeff’s Personalization Context Model.

User Segments

User segments can be created prescriptively or adaptively, based on user research (e.g. via rules and logic tied to set user behaviors or via A/B testing). At a minimum you will likely need to consider how to treat the unknown or first-time visitor, the guest or returning visitor for whom you may have a stateful cookie (or equivalent post-cookie identifier), or the authenticated visitor who is logged in. Here are some examples from the personalization pyramid:

- Unknown

- Guest

- Authenticated

- Default

- Referred

- Role

- Cohort

- Unique ID

Actionable Data

Every organization with any digital presence has data. It’s a matter of asking what data you can ethically collect on users, its inherent reliability and value, as to how can you use it (sometimes known as “data activation.”) Fortunately, the tide is turning to first-party data: a recent study by Twilio estimates some 80% of businesses are using at least some type of first-party data to personalize the customer experience.

First-party data represents multiple advantages on the UX front, including being relatively simple to collect, more likely to be accurate, and less susceptible to the “creep factor” of third-party data. So a key part of your UX strategy should be to determine what the best form of data collection is on your audiences. Here are some examples:

There is a progression of profiling when it comes to recognizing and making decisioning about different audiences and their signals. It tends to move towards more granular constructs about smaller and smaller cohorts of users as time and confidence and data volume grow.

While some combination of implicit / explicit data is generally a prerequisite for any implementation (more commonly referred to as first party and third-party data) ML efforts are typically not cost-effective directly out of the box. This is because a strong data backbone and content repository is a prerequisite for optimization. But these approaches should be considered as part of the larger roadmap and may indeed help accelerate the organization’s overall progress. Typically at this point you will partner with key stakeholders and product owners to design a profiling model. The profiling model includes defining approach to configuring profiles, profile keys, profile cards and pattern cards. A multi-faceted approach to profiling which makes it scalable.

Pulling it Together

While the cards comprise the starting point to an inventory of sorts (we provide blanks for you to tailor your own), a set of potential levers and motivations for the style of personalization activities you aspire to deliver, they are more valuable when thought of in a grouping.

In assembling a card “hand”, one can begin to trace the entire trajectory from leadership focus down through a strategic and tactical execution. It is also at the heart of the way both co-authors have conducted workshops in assembling a program backlog—which is a fine subject for another article.

In the meantime, what is important to note is that each colored class of card is helpful to survey in understanding the range of choices potentially at your disposal, it is threading through and making concrete decisions about for whom this decisioning will be made: where, when, and how.

Lay Down Your Cards

Any sustainable personalization strategy must consider near, mid and long-term goals. Even with the leading CMS platforms like Sitecore and Adobe or the most exciting composable CMS DXP out there, there is simply no “easy button” wherein a personalization program can be stood up and immediately view meaningful results. That said, there is a common grammar to all personalization activities, just like every sentence has nouns and verbs. These cards attempt to map that territory.

Humility: An Essential Value

Humility, a designer’s essential value—that has a nice ring to it. What about humility, an office manager’s essential value? Or a dentist’s? Or a librarian’s? They all sound great. When humility is our guiding light, the path is always open for fulfillment, evolution, connection, and engagement. In this chapter, we’re going to talk about why.

That said, this is a book for designers, and to that end, I’d like to start with a story—well, a journey, really. It’s a personal one, and I’m going to make myself a bit vulnerable along the way. I call it:

The Tale of Justin’s Preposterous Pate

When I was coming out of art school, a long-haired, goateed neophyte, print was a known quantity to me; design on the web, however, was rife with complexities to navigate and discover, a problem to be solved. Though I had been formally trained in graphic design, typography, and layout, what fascinated me was how these traditional skills might be applied to a fledgling digital landscape. This theme would ultimately shape the rest of my career.

So rather than graduate and go into print like many of my friends, I devoured HTML and JavaScript books into the wee hours of the morning and taught myself how to code during my senior year. I wanted—nay, needed—to better understand the underlying implications of what my design decisions would mean once rendered in a browser.

The late ’90s and early 2000s were the so-called “Wild West” of web design. Designers at the time were all figuring out how to apply design and visual communication to the digital landscape. What were the rules? How could we break them and still engage, entertain, and convey information? At a more macro level, how could my values, inclusive of humility, respect, and connection, align in tandem with that? I was hungry to find out.

Though I’m talking about a different era, those are timeless considerations between non-career interactions and the world of design. What are your core passions, or values, that transcend medium? It’s essentially the same concept we discussed earlier on the direct parallels between what fulfills you, agnostic of the tangible or digital realms; the core themes are all the same.

First within tables, animated GIFs, Flash, then with Web Standards, divs, and CSS, there was personality, raw unbridled creativity, and unique means of presentment that often defied any semblance of a visible grid. Splash screens and “browser requirement” pages aplenty. Usability and accessibility were typically victims of such a creation, but such paramount facets of any digital design were largely (and, in hindsight, unfairly) disregarded at the expense of experimentation.

For example, this iteration of my personal portfolio site (“the pseudoroom”) from that era was experimental, if not a bit heavy- handed, in the visual communication of the concept of a living sketchbook. Very skeuomorphic. I collaborated with fellow designer and dear friend Marc Clancy (now a co-founder of the creative project organizing app Milanote) on this one, where we’d first sketch and then pass a Photoshop file back and forth to trick things out and play with varied user interactions. Then, I’d break it down and code it into a digital layout.

Along with design folio pieces, the site also offered free downloads for Mac OS customizations: desktop wallpapers that were effectively design experimentation, custom-designed typefaces, and desktop icons.

From around the same time, GUI Galaxy was a design, pixel art, and Mac-centric news portal some graphic designer friends and I conceived, designed, developed, and deployed.

Design news portals were incredibly popular during this period, featuring (what would now be considered) Tweet-size, small-format snippets of pertinent news from the categories I previously mentioned. If you took Twitter, curated it to a few categories, and wrapped it in a custom-branded experience, you’d have a design news portal from the late 90s / early 2000s.

We as designers had evolved and created a bandwidth-sensitive, web standards award-winning, much more accessibility-conscious website. Still ripe with experimentation, yet more mindful of equitable engagement. You can see a couple of content panes here, noting general news (tech, design) and Mac-centric news below. We also offered many of the custom downloads I cited before as present on my folio site but branded and themed to GUI Galaxy.

The site’s backbone was a homegrown CMS, with the presentation layer consisting of global design + illustration + news author collaboration. And the collaboration effort here, in addition to experimentation on a ‘brand’ and content delivery, was hitting my core. We were designing something bigger than any single one of us and connecting with a global audience.

Collaboration and connection transcend medium in their impact, immensely fulfilling me as a designer.

Now, why am I taking you down this trip of design memory lane? Two reasons.

First, there’s a reason for the nostalgia for that design era (the “Wild West” era, as I called it earlier): the inherent exploration, personality, and creativity that saturated many design portals and personal portfolio sites. Ultra-finely detailed pixel art UI, custom illustration, bespoke vector graphics, all underpinned by a strong design community.

Today’s web design has been in a period of stagnation. I suspect there’s a strong chance you’ve seen a site whose structure looks something like this: a hero image / banner with text overlaid, perhaps with a lovely rotating carousel of images (laying the snark on heavy there), a call to action, and three columns of sub-content directly beneath. Maybe an icon library is employed with selections that vaguely relate to their respective content.

Design, as it’s applied to the digital landscape, is in dire need of thoughtful layout, typography, and visual engagement that goes hand-in-hand with all the modern considerations we now know are paramount: usability. Accessibility. Load times and bandwidth- sensitive content delivery. A responsive presentation that meets human beings wherever they’re engaging from. We must be mindful of, and respectful toward, those concerns—but not at the expense of creativity of visual communication or via replicating cookie-cutter layouts.

Pixel Problems

Websites during this period were often designed and built on Macs whose OS and desktops looked something like this. This is Mac OS 7.5, but 8 and 9 weren’t that different.

Desktop icons fascinated me: how could any single one, at any given point, stand out to get my attention? In this example, the user’s desktop is tidy, but think of a more realistic example with icon pandemonium. Or, say an icon was part of a larger system grouping (fonts, extensions, control panels)—how did it also maintain cohesion amongst a group?

These were 32 x 32 pixel creations, utilizing a 256-color palette, designed pixel-by-pixel as mini mosaics. To me, this was the embodiment of digital visual communication under such ridiculous constraints. And often, ridiculous restrictions can yield the purification of concept and theme.

So I began to research and do my homework. I was a student of this new medium, hungry to dissect, process, discover, and make it my own.

Expanding upon the notion of exploration, I wanted to see how I could push the limits of a 32×32 pixel grid with that 256-color palette. Those ridiculous constraints forced a clarity of concept and presentation that I found incredibly appealing. The digital gauntlet had been tossed, and that challenge fueled me. And so, in my dorm room into the wee hours of the morning, I toiled away, bringing conceptual sketches into mini mosaic fruition.

These are some of my creations, utilizing the only tool available at the time to create icons called ResEdit. ResEdit was a clunky, built-in Mac OS utility not really made for exactly what we were using it for. At the core of all of this work: Research. Challenge. Problem- solving. Again, these core connection-based values are agnostic of medium.

There’s one more design portal I want to talk about, which also serves as the second reason for my story to bring this all together.

This is K10k, short for Kaliber 1000. K10k was founded in 1998 by Michael Schmidt and Toke Nygaard, and was the design news portal on the web during this period. With its pixel art-fueled presentation, ultra-focused care given to every facet and detail, and with many of the more influential designers of the time who were invited to be news authors on the site, well… it was the place to be, my friend. With respect where respect is due, GUI Galaxy’s concept was inspired by what these folks were doing.

For my part, the combination of my web design work and pixel art exploration began to get me some notoriety in the design scene. Eventually, K10k noticed and added me as one of their very select group of news authors to contribute content to the site.

Amongst my personal work and side projects—and now with this inclusion—in the design community, this put me on the map. My design work also began to be published in various printed collections, in magazines domestically and overseas, and featured on other design news portals. With that degree of success while in my early twenties, something else happened:

I evolved—devolved, really—into a colossal asshole (and in just about a year out of art school, no less). The press and the praise became what fulfilled me, and they went straight to my head. They inflated my ego. I actually felt somewhat superior to my fellow designers.

The casualties? My design stagnated. Its evolution—my evolution— stagnated.

I felt so supremely confident in my abilities that I effectively stopped researching and discovering. When previously sketching concepts or iterating ideas in lead was my automatic step one, I instead leaped right into Photoshop. I drew my inspiration from the smallest of sources (and with blinders on). Any critique of my work from my peers was often vehemently dismissed. The most tragic loss: I had lost touch with my values.

My ego almost cost me some of my friendships and burgeoning professional relationships. I was toxic in talking about design and in collaboration. But thankfully, those same friends gave me a priceless gift: candor. They called me out on my unhealthy behavior.

Admittedly, it was a gift I initially did not accept but ultimately was able to deeply reflect upon. I was soon able to accept, and process, and course correct. The realization laid me low, but the re-awakening was essential. I let go of the “reward” of adulation and re-centered upon what stoked the fire for me in art school. Most importantly: I got back to my core values.

Always Students

Following that short-term regression, I was able to push forward in my personal design and career. And I could self-reflect as I got older to facilitate further growth and course correction as needed.

As an example, let’s talk about the Large Hadron Collider. The LHC was designed “to help answer some of the fundamental open questions in physics, which concern the basic laws governing the interactions and forces among the elementary objects, the deep structure of space and time, and in particular the interrelation between quantum mechanics and general relativity.” Thanks, Wikipedia.

Around fifteen years ago, in one of my earlier professional roles, I designed the interface for the application that generated the LHC’s particle collision diagrams. These diagrams are the rendering of what’s actually happening inside the Collider during any given particle collision event and are often considered works of art unto themselves.

Designing the interface for this application was a fascinating process for me, in that I worked with Fermilab physicists to understand what the application was trying to achieve, but also how the physicists themselves would be using it. To that end, in this role,

I cut my teeth on usability testing, working with the Fermilab team to iterate and improve the interface. How they spoke and what they spoke about was like an alien language to me. And by making myself humble and working under the mindset that I was but a student, I made myself available to be a part of their world to generate that vital connection.

I also had my first ethnographic observation experience: going to the Fermilab location and observing how the physicists used the tool in their actual environment, on their actual terminals. For example, one takeaway was that due to the level of ambient light-driven contrast within the facility, the data columns ended up using white text on a dark gray background instead of black text-on-white. This enabled them to pore over reams of data during the day and ease their eye strain. And Fermilab and CERN are government entities with rigorous accessibility standards, so my knowledge in that realm also grew. The barrier-free design was another essential form of connection.

So to those core drivers of my visual problem-solving soul and ultimate fulfillment: discovery, exposure to new media, observation, human connection, and evolution. What opened the door for those values was me checking my ego before I walked through it.

An evergreen willingness to listen, learn, understand, grow, evolve, and connect yields our best work. In particular, I want to focus on the words ‘grow’ and ‘evolve’ in that statement. If we are always students of our craft, we are also continually making ourselves available to evolve. Yes, we have years of applicable design study under our belt. Or the focused lab sessions from a UX bootcamp. Or the monogrammed portfolio of our work. Or, ultimately, decades of a career behind us.

But all that said: experience does not equal “expert.”

As soon as we close our minds via an inner monologue of ‘knowing it all’ or branding ourselves a “#thoughtleader” on social media, the designer we are is our final form. The designer we can be will never exist.

I am a creative.

I am a creative. What I do is alchemy. It is a mystery. I do not so much do it, as let it be done through me.

I am a creative. Not all creative people like this label. Not all see themselves this way. Some creative people see science in what they do. That is their truth, and I respect it. Maybe I even envy them, a little. But my process is different—my being is different.

Apologizing and qualifying in advance is a distraction. That’s what my brain does to sabotage me. I set it aside for now. I can come back later to apologize and qualify. After I’ve said what I came to say. Which is hard enough.

Except when it is easy and flows like a river of wine.

Sometimes it does come that way. Sometimes what I need to create comes in an instant. I have learned not to say it at that moment, because if you admit that sometimes the idea just comes and it is the best idea and you know it is the best idea, they think you don’t work hard enough.

Sometimes I work and work and work until the idea comes. Sometimes it comes instantly and I don’t tell anyone for three days. Sometimes I’m so excited by the idea that came instantly that I blurt it out, can’t help myself. Like a boy who found a prize in his Cracker Jacks. Sometimes I get away with this. Sometimes other people agree: yes, that is the best idea. Most times they don’t and I regret having given way to enthusiasm.

Enthusiasm is best saved for the meeting where it will make a difference. Not the casual get-together that precedes that meeting by two other meetings. Nobody knows why we have all these meetings. We keep saying we’re doing away with them, but then just finding other ways to have them. Sometimes they are even good. But other times they are a distraction from the actual work. The proportion between when meetings are useful, and when they are a pitiful distraction, varies, depending on what you do and where you do it. And who you are and how you do it. Again I digress. I am a creative. That is the theme.

Sometimes many hours of hard and patient work produce something that is barely serviceable. Sometimes I have to accept that and move on to the next project.

Don’t ask about process. I am a creative.

I am a creative. I don’t control my dreams. And I don’t control my best ideas.

I can hammer away, surround myself with facts or images, and sometimes that works. I can go for a walk, and sometimes that works. I can be making dinner and there’s a Eureka having nothing to do with sizzling oil and bubbling pots. Often I know what to do the instant I wake up. And then, almost as often, as I become conscious and part of the world again, the idea that would have saved me turns to vanishing dust in a mindless wind of oblivion. For creativity, I believe, comes from that other world. The one we enter in dreams, and perhaps, before birth and after death. But that’s for poets to wonder, and I am not a poet. I am a creative. And it’s for theologians to mass armies about in their creative world that they insist is real. But that is another digression. And a depressing one. Maybe on a much more important topic than whether I am a creative or not. But still a digression from what I came here to say.

Sometimes the process is avoidance. And agony. You know the cliché about the tortured artist? It’s true, even when the artist (and let’s put that noun in quotes) is trying to write a soft drink jingle, a callback in a tired sitcom, a budget request.

Some people who hate being called creative may be closeted creatives, but that’s between them and their gods. No offense meant. Your truth is true, too. But mine is for me.

Creatives recognize creatives.

Creatives recognize creatives like queers recognize queers, like real rappers recognize real rappers, like cons know cons. Creatives feel massive respect for creatives. We love, honor, emulate, and practically deify the great ones. To deify any human is, of course, a tragic mistake. We have been warned. We know better. We know people are just people. They squabble, they are lonely, they regret their most important decisions, they are poor and hungry, they can be cruel, they can be just as stupid as we can, because, like us, they are clay. But. But. But they make this amazing thing. They birth something that did not exist before them, and could not exist without them. They are the mothers of ideas. And I suppose, since it’s just lying there, I have to add that they are the mothers of invention. Ba dum bum! OK, that’s done. Continue.

Creatives belittle our own small achievements, because we compare them to those of the great ones. Beautiful animation! Well, I’m no Miyazaki. Now THAT is greatness. That is greatness straight from the mind of God. This half-starved little thing that I made? It more or less fell off the back of the turnip truck. And the turnips weren’t even fresh.

Creatives knows that, at best, they are Salieri. Even the creatives who are Mozart believe that.

I am a creative. I haven’t worked in advertising in 30 years, but in my nightmares, it’s my former creative directors who judge me. And they are right to do so. I am too lazy, too facile, and when it really counts, my mind goes blank. There is no pill for creative dysfunction.