To Ignite a Personalization Practice, Run this Prepersonalization Workshop

Picture this. You’ve joined a squad at your company that’s designing new product features with an emphasis on automation or AI. Or your company has just implemented a personalization engine. Either way, you’re designing with data. Now what? When it comes to designing for personalization, there are many cautionary tales, no overnight successes, and few guides for the perplexed.

Between the fantasy of getting it right and the fear of it going wrong—like when we encounter “persofails” in the vein of a company repeatedly imploring everyday consumers to buy additional toilet seats—the personalization gap is real. It’s an especially confounding place to be a digital professional without a map, a compass, or a plan.

For those of you venturing into personalization, there’s no Lonely Planet and few tour guides because effective personalization is so specific to each organization’s talent, technology, and market position.

But you can ensure that your team has packed its bags sensibly.

There’s a DIY formula to increase your chances for success. At minimum, you’ll defuse your boss’s irrational exuberance. Before the party you’ll need to effectively prepare.

We call it prepersonalization.

Behind the music

Consider Spotify’s DJ feature, which debuted this past year.

We’re used to seeing the polished final result of a personalization feature. Before the year-end award, the making-of backstory, or the behind-the-scenes victory lap, a personalized feature had to be conceived, budgeted, and prioritized. Before any personalization feature goes live in your product or service, it lives amid a backlog of worthy ideas for expressing customer experiences more dynamically.

So how do you know where to place your personalization bets? How do you design consistent interactions that won’t trip up users or—worse—breed mistrust? We’ve found that for many budgeted programs to justify their ongoing investments, they first needed one or more workshops to convene key stakeholders and internal customers of the technology. Make yours count.

From Big Tech to fledgling startups, we’ve seen the same evolution up close with our clients. In our experiences with working on small and large personalization efforts, a program’s ultimate track record—and its ability to weather tough questions, work steadily toward shared answers, and organize its design and technology efforts—turns on how effectively these prepersonalization activities play out.

Time and again, we’ve seen effective workshops separate future success stories from unsuccessful efforts, saving countless time, resources, and collective well-being in the process.

A personalization practice involves a multiyear effort of testing and feature development. It’s not a switch-flip moment in your tech stack. It’s best managed as a backlog that often evolves through three steps:

- customer experience optimization (CXO, also known as A/B testing or experimentation)

- always-on automations (whether rules-based or machine-generated)

- mature features or standalone product development (such as Spotify’s DJ experience)

This is why we created our progressive personalization framework and why we’re field-testing an accompanying deck of cards: we believe that there’s a base grammar, a set of “nouns and verbs” that your organization can use to design experiences that are customized, personalized, or automated. You won’t need these cards. But we strongly recommend that you create something similar, whether that might be digital or physical.

Set your kitchen timer

How long does it take to cook up a prepersonalization workshop? The surrounding assessment activities that we recommend including can (and often do) span weeks. For the core workshop, we recommend aiming for two to three days. Here’s a summary of our broader approach along with details on the essential first-day activities.

The full arc of the wider workshop is threefold:

- Kickstart: This sets the terms of engagement as you focus on the opportunity as well as the readiness and drive of your team and your leadership. .

- Plan your work: This is the heart of the card-based workshop activities where you specify a plan of attack and the scope of work.

- Work your plan: This phase is all about creating a competitive environment for team participants to individually pitch their own pilots that each contain a proof-of-concept project, its business case, and its operating model.

Give yourself at least a day, split into two large time blocks, to power through a concentrated version of those first two phases.

Kickstart: Whet your appetite

We call the first lesson the “landscape of connected experience.” It explores the personalization possibilities in your organization. A connected experience, in our parlance, is any UX requiring the orchestration of multiple systems of record on the backend. This could be a content-management system combined with a marketing-automation platform. It could be a digital-asset manager combined with a customer-data platform.

Spark conversation by naming consumer examples and business-to-business examples of connected experience interactions that you admire, find familiar, or even dislike. This should cover a representative range of personalization patterns, including automated app-based interactions (such as onboarding sequences or wizards), notifications, and recommenders. We have a catalog of these in the cards. Here’s a list of 142 different interactions to jog your thinking.

This is all about setting the table. What are the possible paths for the practice in your organization? If you want a broader view, here’s a long-form primer and a strategic framework.

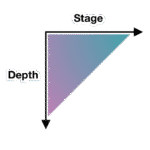

Assess each example that you discuss for its complexity and the level of effort that you estimate that it would take for your team to deliver that feature (or something similar). In our cards, we divide connected experiences into five levels: functions, features, experiences, complete products, and portfolios. Size your own build here. This will help to focus the conversation on the merits of ongoing investment as well as the gap between what you deliver today and what you want to deliver in the future.

Next, have your team plot each idea on the following 2×2 grid, which lays out the four enduring arguments for a personalized experience. This is critical because it emphasizes how personalization can not only help your external customers but also affect your own ways of working. It’s also a reminder (which is why we used the word argument earlier) of the broader effort beyond these tactical interventions.

Each team member should vote on where they see your product or service putting its emphasis. Naturally, you can’t prioritize all of them. The intention here is to flesh out how different departments may view their own upsides to the effort, which can vary from one to the next. Documenting your desired outcomes lets you know how the team internally aligns across representatives from different departments or functional areas.

The third and final kickstart activity is about naming your personalization gap. Is your customer journey well documented? Will data and privacy compliance be too big of a challenge? Do you have content metadata needs that you have to address? (We’re pretty sure that you do: it’s just a matter of recognizing the relative size of that need and its remedy.) In our cards, we’ve noted a number of program risks, including common team dispositions. Our Detractor card, for example, lists six stakeholder behaviors that hinder progress.

Effectively collaborating and managing expectations is critical to your success. Consider the potential barriers to your future progress. Press the participants to name specific steps to overcome or mitigate those barriers in your organization. As studies have shown, personalization efforts face many common barriers.

At this point, you’ve hopefully discussed sample interactions, emphasized a key area of benefit, and flagged key gaps? Good—you’re ready to continue.

Hit that test kitchen

Next, let’s look at what you’ll need to bring your personalization recipes to life. Personalization engines, which are robust software suites for automating and expressing dynamic content, can intimidate new customers. Their capabilities are sweeping and powerful, and they present broad options for how your organization can conduct its activities. This presents the question: Where do you begin when you’re configuring a connected experience?

What’s important here is to avoid treating the installed software like it were a dream kitchen from some fantasy remodeling project (as one of our client executives memorably put it). These software engines are more like test kitchens where your team can begin devising, tasting, and refining the snacks and meals that will become a part of your personalization program’s regularly evolving menu.

The ultimate menu of the prioritized backlog will come together over the course of the workshop. And creating “dishes” is the way that you’ll have individual team stakeholders construct personalized interactions that serve their needs or the needs of others.

The dishes will come from recipes, and those recipes have set ingredients.

Verify your ingredients

Like a good product manager, you’ll make sure—andyou’ll validate with the right stakeholders present—that you have all the ingredients on hand to cook up your desired interaction (or that you can work out what needs to be added to your pantry). These ingredients include the audience that you’re targeting, content and design elements, the context for the interaction, and your measure for how it’ll come together.

This isn’t just about discovering requirements. Documenting your personalizations as a series of if-then statements lets the team:

- compare findings toward a unified approach for developing features, not unlike when artists paint with the same palette;

- specify a consistent set of interactions that users find uniform or familiar;

- and develop parity across performance measurements and key performance indicators too.

This helps you streamline your designs and your technical efforts while you deliver a shared palette of core motifs of your personalized or automated experience.

Compose your recipe

What ingredients are important to you? Think of a who-what-when-why construct:

- Who are your key audience segments or groups?

- What kind of content will you give them, in what design elements, and under what circumstances?

- And for which business and user benefits?

We first developed these cards and card categories five years ago. We regularly play-test their fit with conference audiences and clients. And we still encounter new possibilities. But they all follow an underlying who-what-when-why logic.

Here are three examples for a subscription-based reading app, which you can generally follow along with right to left in the cards in the accompanying photo below.

- Nurture personalization: When a guest or an unknown visitor interacts with a product title, a banner or alert bar appears that makes it easier for them to encounter a related title they may want to read, saving them time.

- Welcome automation: When there’s a newly registered user, an email is generated to call out the breadth of the content catalog and to make them a happier subscriber.

- Winback automation: Before their subscription lapses or after a recent failed renewal, a user is sent an email that gives them a promotional offer to suggest that they reconsider renewing or to remind them to renew.

A useful preworkshop activity may be to think through a first draft of what these cards might be for your organization, although we’ve also found that this process sometimes flows best through cocreating the recipes themselves. Start with a set of blank cards, and begin labeling and grouping them through the design process, eventually distilling them to a refined subset of highly useful candidate cards.

You can think of the later stages of the workshop as moving from recipes toward a cookbook in focus—like a more nuanced customer-journey mapping. Individual “cooks” will pitch their recipes to the team, using a common jobs-to-be-done format so that measurability and results are baked in, and from there, the resulting collection will be prioritized for finished design and delivery to production.

Better kitchens require better architecture

Simplifying a customer experience is a complicated effort for those who are inside delivering it. Beware anyone who says otherwise. With that being said, “Complicated problems can be hard to solve, but they are addressable with rules and recipes.”

When personalization becomes a laugh line, it’s because a team is overfitting: they aren’t designing with their best data. Like a sparse pantry, every organization has metadata debt to go along with its technical debt, and this creates a drag on personalization effectiveness. Your AI’s output quality, for example, is indeed limited by your IA. Spotify’s poster-child prowess today was unfathomable before they acquired a seemingly modest metadata startup that now powers its underlying information architecture.

You can definitely stand the heat…

Personalization technology opens a doorway into a confounding ocean of possible designs. Only a disciplined and highly collaborative approach will bring about the necessary focus and intention to succeed. So banish the dream kitchen. Instead, hit the test kitchen to save time, preserve job satisfaction and security, and safely dispense with the fanciful ideas that originate upstairs of the doers in your organization. There are meals to serve and mouths to feed.

This workshop framework gives you a fighting shot at lasting success as well as sound beginnings. Wiring up your information layer isn’t an overnight affair. But if you use the same cookbook and shared recipes, you’ll have solid footing for success. We designed these activities to make your organization’s needs concrete and clear, long before the hazards pile up.

While there are associated costs toward investing in this kind of technology and product design, your ability to size up and confront your unique situation and your digital capabilities is time well spent. Don’t squander it. The proof, as they say, is in the pudding.

User Research Is Storytelling

Ever since I was a boy, I’ve been fascinated with movies. I loved the characters and the excitement—but most of all the stories. I wanted to be an actor. And I believed that I’d get to do the things that Indiana Jones did and go on exciting adventures. I even dreamed up ideas for movies that my friends and I could make and star in. But they never went any further. I did, however, end up working in user experience (UX). Now, I realize that there’s an element of theater to UX—I hadn’t really considered it before, but user research is storytelling. And to get the most out of user research, you need to tell a good story where you bring stakeholders—the product team and decision makers—along and get them interested in learning more.

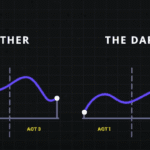

Think of your favorite movie. More than likely it follows a three-act structure that’s commonly seen in storytelling: the setup, the conflict, and the resolution. The first act shows what exists today, and it helps you get to know the characters and the challenges and problems that they face. Act two introduces the conflict, where the action is. Here, problems grow or get worse. And the third and final act is the resolution. This is where the issues are resolved and the characters learn and change. I believe that this structure is also a great way to think about user research, and I think that it can be especially helpful in explaining user research to others.

Use storytelling as a structure to do research

It’s sad to say, but many have come to see research as being expendable. If budgets or timelines are tight, research tends to be one of the first things to go. Instead of investing in research, some product managers rely on designers or—worse—their own opinion to make the “right” choices for users based on their experience or accepted best practices. That may get teams some of the way, but that approach can so easily miss out on solving users’ real problems. To remain user-centered, this is something we should avoid. User research elevates design. It keeps it on track, pointing to problems and opportunities. Being aware of the issues with your product and reacting to them can help you stay ahead of your competitors.

In the three-act structure, each act corresponds to a part of the process, and each part is critical to telling the whole story. Let’s look at the different acts and how they align with user research.

Act one: setup

The setup is all about understanding the background, and that’s where foundational research comes in. Foundational research (also called generative, discovery, or initial research) helps you understand users and identify their problems. You’re learning about what exists today, the challenges users have, and how the challenges affect them—just like in the movies. To do foundational research, you can conduct contextual inquiries or diary studies (or both!), which can help you start to identify problems as well as opportunities. It doesn’t need to be a huge investment in time or money.

Erika Hall writes about minimum viable ethnography, which can be as simple as spending 15 minutes with a user and asking them one thing: “‘Walk me through your day yesterday.’ That’s it. Present that one request. Shut up and listen to them for 15 minutes. Do your damndest to keep yourself and your interests out of it. Bam, you’re doing ethnography.” According to Hall, “[This] will probably prove quite illuminating. In the highly unlikely case that you didn’t learn anything new or useful, carry on with enhanced confidence in your direction.”

This makes total sense to me. And I love that this makes user research so accessible. You don’t need to prepare a lot of documentation; you can just recruit participants and do it! This can yield a wealth of information about your users, and it’ll help you better understand them and what’s going on in their lives. That’s really what act one is all about: understanding where users are coming from.

Jared Spool talks about the importance of foundational research and how it should form the bulk of your research. If you can draw from any additional user data that you can get your hands on, such as surveys or analytics, that can supplement what you’ve heard in the foundational studies or even point to areas that need further investigation. Together, all this data paints a clearer picture of the state of things and all its shortcomings. And that’s the beginning of a compelling story. It’s the point in the plot where you realize that the main characters—or the users in this case—are facing challenges that they need to overcome. Like in the movies, this is where you start to build empathy for the characters and root for them to succeed. And hopefully stakeholders are now doing the same. Their sympathy may be with their business, which could be losing money because users can’t complete certain tasks. Or maybe they do empathize with users’ struggles. Either way, act one is your initial hook to get the stakeholders interested and invested.

Once stakeholders begin to understand the value of foundational research, that can open doors to more opportunities that involve users in the decision-making process. And that can guide product teams toward being more user-centered. This benefits everyone—users, the product, and stakeholders. It’s like winning an Oscar in movie terms—it often leads to your product being well received and successful. And this can be an incentive for stakeholders to repeat this process with other products. Storytelling is the key to this process, and knowing how to tell a good story is the only way to get stakeholders to really care about doing more research.

This brings us to act two, where you iteratively evaluate a design or concept to see whether it addresses the issues.

Act two: conflict

Act two is all about digging deeper into the problems that you identified in act one. This usually involves directional research, such as usability tests, where you assess a potential solution (such as a design) to see whether it addresses the issues that you found. The issues could include unmet needs or problems with a flow or process that’s tripping users up. Like act two in a movie, more issues will crop up along the way. It’s here that you learn more about the characters as they grow and develop through this act.

Usability tests should typically include around five participants according to Jakob Nielsen, who found that that number of users can usually identify most of the problems: “As you add more and more users, you learn less and less because you will keep seeing the same things again and again… After the fifth user, you are wasting your time by observing the same findings repeatedly but not learning much new.”

There are parallels with storytelling here too; if you try to tell a story with too many characters, the plot may get lost. Having fewer participants means that each user’s struggles will be more memorable and easier to relay to other stakeholders when talking about the research. This can help convey the issues that need to be addressed while also highlighting the value of doing the research in the first place.

Researchers have run usability tests in person for decades, but you can also conduct usability tests remotely using tools like Microsoft Teams, Zoom, or other teleconferencing software. This approach has become increasingly popular since the beginning of the pandemic, and it works well. You can think of in-person usability tests like going to a play and remote sessions as more like watching a movie. There are advantages and disadvantages to each. In-person usability research is a much richer experience. Stakeholders can experience the sessions with other stakeholders. You also get real-time reactions—including surprise, agreement, disagreement, and discussions about what they’re seeing. Much like going to a play, where audiences get to take in the stage, the costumes, the lighting, and the actors’ interactions, in-person research lets you see users up close, including their body language, how they interact with the moderator, and how the scene is set up.

If in-person usability testing is like watching a play—staged and controlled—then conducting usability testing in the field is like immersive theater where any two sessions might be very different from one another. You can take usability testing into the field by creating a replica of the space where users interact with the product and then conduct your research there. Or you can go out to meet users at their location to do your research. With either option, you get to see how things work in context, things come up that wouldn’t have in a lab environment—and conversion can shift in entirely different directions. As researchers, you have less control over how these sessions go, but this can sometimes help you understand users even better. Meeting users where they are can provide clues to the external forces that could be affecting how they use your product. In-person usability tests provide another level of detail that’s often missing from remote usability tests.

That’s not to say that the “movies”—remote sessions—aren’t a good option. Remote sessions can reach a wider audience. They allow a lot more stakeholders to be involved in the research and to see what’s going on. And they open the doors to a much wider geographical pool of users. But with any remote session there is the potential of time wasted if participants can’t log in or get their microphone working.

The benefit of usability testing, whether remote or in person, is that you get to see real users interact with the designs in real time, and you can ask them questions to understand their thought processes and grasp of the solution. This can help you not only identify problems but also glean why they’re problems in the first place. Furthermore, you can test hypotheses and gauge whether your thinking is correct. By the end of the sessions, you’ll have a much clearer picture of how usable the designs are and whether they work for their intended purposes. Act two is the heart of the story—where the excitement is—but there can be surprises too. This is equally true of usability tests. Often, participants will say unexpected things, which change the way that you look at things—and these twists in the story can move things in new directions.

Unfortunately, user research is sometimes seen as expendable. And too often usability testing is the only research process that some stakeholders think that they ever need. In fact, if the designs that you’re evaluating in the usability test aren’t grounded in a solid understanding of your users (foundational research), there’s not much to be gained by doing usability testing in the first place. That’s because you’re narrowing the focus of what you’re getting feedback on, without understanding the users’ needs. As a result, there’s no way of knowing whether the designs might solve a problem that users have. It’s only feedback on a particular design in the context of a usability test.

On the other hand, if you only do foundational research, while you might have set out to solve the right problem, you won’t know whether the thing that you’re building will actually solve that. This illustrates the importance of doing both foundational and directional research.

In act two, stakeholders will—hopefully—get to watch the story unfold in the user sessions, which creates the conflict and tension in the current design by surfacing their highs and lows. And in turn, this can help motivate stakeholders to address the issues that come up.

Act three: resolution

While the first two acts are about understanding the background and the tensions that can propel stakeholders into action, the third part is about resolving the problems from the first two acts. While it’s important to have an audience for the first two acts, it’s crucial that they stick around for the final act. That means the whole product team, including developers, UX practitioners, business analysts, delivery managers, product managers, and any other stakeholders that have a say in the next steps. It allows the whole team to hear users’ feedback together, ask questions, and discuss what’s possible within the project’s constraints. And it lets the UX research and design teams clarify, suggest alternatives, or give more context behind their decisions. So you can get everyone on the same page and get agreement on the way forward.

This act is mostly told in voiceover with some audience participation. The researcher is the narrator, who paints a picture of the issues and what the future of the product could look like given the things that the team has learned. They give the stakeholders their recommendations and their guidance on creating this vision.

Nancy Duarte in the Harvard Business Review offers an approach to structuring presentations that follow a persuasive story. “The most effective presenters use the same techniques as great storytellers: By reminding people of the status quo and then revealing the path to a better way, they set up a conflict that needs to be resolved,” writes Duarte. “That tension helps them persuade the audience to adopt a new mindset or behave differently.”

This type of structure aligns well with research results, and particularly results from usability tests. It provides evidence for “what is”—the problems that you’ve identified. And “what could be”—your recommendations on how to address them. And so on and so forth.

You can reinforce your recommendations with examples of things that competitors are doing that could address these issues or with examples where competitors are gaining an edge. Or they can be visual, like quick mockups of how a new design could look that solves a problem. These can help generate conversation and momentum. And this continues until the end of the session when you’ve wrapped everything up in the conclusion by summarizing the main issues and suggesting a way forward. This is the part where you reiterate the main themes or problems and what they mean for the product—the denouement of the story. This stage gives stakeholders the next steps and hopefully the momentum to take those steps!

While we are nearly at the end of this story, let’s reflect on the idea that user research is storytelling. All the elements of a good story are there in the three-act structure of user research:

- Act one: You meet the protagonists (the users) and the antagonists (the problems affecting users). This is the beginning of the plot. In act one, researchers might use methods including contextual inquiry, ethnography, diary studies, surveys, and analytics. The output of these methods can include personas, empathy maps, user journeys, and analytics dashboards.

- Act two: Next, there’s character development. There’s conflict and tension as the protagonists encounter problems and challenges, which they must overcome. In act two, researchers might use methods including usability testing, competitive benchmarking, and heuristics evaluation. The output of these can include usability findings reports, UX strategy documents, usability guidelines, and best practices.

- Act three: The protagonists triumph and you see what a better future looks like. In act three, researchers may use methods including presentation decks, storytelling, and digital media. The output of these can be: presentation decks, video clips, audio clips, and pictures.

The researcher has multiple roles: they’re the storyteller, the director, and the producer. The participants have a small role, but they are significant characters (in the research). And the stakeholders are the audience. But the most important thing is to get the story right and to use storytelling to tell users’ stories through research. By the end, the stakeholders should walk away with a purpose and an eagerness to resolve the product’s ills.

So the next time that you’re planning research with clients or you’re speaking to stakeholders about research that you’ve done, think about how you can weave in some storytelling. Ultimately, user research is a win-win for everyone, and you just need to get stakeholders interested in how the story ends.

From Beta to Bedrock: Build Products that Stick.

As a product builder over too many years to mention, I’ve lost count of the number of times I’ve seen promising ideas go from zero to hero in a few weeks, only to fizzle out within months.

Financial products, which is the field I work in, are no exception. With people’s real hard-earned money on the line, user expectations running high, and a crowded market, it’s tempting to throw as many features at the wall as possible and hope something sticks. But this approach is a recipe for disaster. Here’s why:

The pitfalls of feature-first development

When you start building a financial product from the ground up, or are migrating existing customer journeys from paper or telephony channels onto online banking or mobile apps, it’s easy to get caught up in the excitement of creating new features. You might think, “If I can just add one more thing that solves this particular user problem, they’ll love me!” But what happens when you inevitably hit a roadblock because the narcs (your security team!) don’t like it? When a hard-fought feature isn’t as popular as you thought, or it breaks due to unforeseen complexity?

This is where the concept of Minimum Viable Product (MVP) comes in. Jason Fried’s book Getting Real and his podcast Rework often touch on this idea, even if he doesn’t always call it that. An MVP is a product that provides just enough value to your users to keep them engaged, but not so much that it becomes overwhelming or difficult to maintain. It sounds like an easy concept but it requires a razor sharp eye, a ruthless edge and having the courage to stick by your opinion because it is easy to be seduced by “the Columbo Effect”… when there’s always “just one more thing…” that someone wants to add.

The problem with most finance apps, however, is that they often become a reflection of the internal politics of the business rather than an experience solely designed around the customer. This means that the focus is on delivering as many features and functionalities as possible to satisfy the needs and desires of competing internal departments, rather than providing a clear value proposition that is focused on what the people out there in the real world want. As a result, these products can very easily bloat to become a mixed bag of confusing, unrelated and ultimately unlovable customer experiences—a feature salad, you might say.

The importance of bedrock

So what’s a better approach? How can we build products that are stable, user-friendly, and—most importantly—stick?

That’s where the concept of “bedrock” comes in. Bedrock is the core element of your product that truly matters to users. It’s the fundamental building block that provides value and stays relevant over time.

In the world of retail banking, which is where I work, the bedrock has got to be in and around the regular servicing journeys. People open their current account once in a blue moon but they look at it every day. They sign up for a credit card every year or two, but they check their balance and pay their bill at least once a month.

Identifying the core tasks that people want to do and then relentlessly striving to make them easy to do, dependable, and trustworthy is where the gravy’s at.

But how do you get to bedrock? By focusing on the “MVP” approach, prioritizing simplicity, and iterating towards a clear value proposition. This means cutting out unnecessary features and focusing on delivering real value to your users.

It also means having some guts, because your colleagues might not always instantly share your vision to start with. And controversially, sometimes it can even mean making it clear to customers that you’re not going to come to their house and make their dinner. The occasional “opinionated user interface design” (i.e. clunky workaround for edge cases) might sometimes be what you need to use to test a concept or buy you space to work on something more important.

Practical strategies for building financial products that stick

So what are the key strategies I’ve learned from my own experience and research?

- Start with a clear “why”: What problem are you trying to solve? For whom? Make sure your mission is crystal clear before building anything. Make sure it aligns with your company’s objectives, too.

- Focus on a single, core feature and obsess on getting that right before moving on to something else: Resist the temptation to add too many features at once. Instead, choose one that delivers real value and iterate from there.

- Prioritize simplicity over complexity: Less is often more when it comes to financial products. Cut out unnecessary bells and whistles and keep the focus on what matters most.

- Embrace continuous iteration: Bedrock isn’t a fixed destination—it’s a dynamic process. Continuously gather user feedback, refine your product, and iterate towards that bedrock state.

- Stop, look and listen: Don’t just test your product as part of your delivery process—test it repeatedly in the field. Use it yourself. Run A/B tests. Gather user feedback. Talk to people who use it, and refine accordingly.

The bedrock paradox

There’s an interesting paradox at play here: building towards bedrock means sacrificing some short-term growth potential in favour of long-term stability. But the payoff is worth it—products built with a focus on bedrock will outlast and outperform their competitors, and deliver sustained value to users over time.

So, how do you start your journey towards bedrock? Take it one step at a time. Start by identifying those core elements that truly matter to your users. Focus on building and refining a single, powerful feature that delivers real value. And above all, test obsessively—for, in the words of Abraham Lincoln, Alan Kay, or Peter Drucker (whomever you believe!!), “The best way to predict the future is to create it.”

An Holistic Framework for Shared Design Leadership

Picture this: You’re in a meeting room at your tech company, and two people are having what looks like the same conversation about the same design problem. One is talking about whether the team has the right skills to tackle it. The other is diving deep into whether the solution actually solves the user’s problem. Same room, same problem, completely different lenses.

This is the beautiful, sometimes messy reality of having both a Design Manager and a Lead Designer on the same team. And if you’re wondering how to make this work without creating confusion, overlap, or the dreaded “too many cooks” scenario, you’re asking the right question.

The traditional answer has been to draw clean lines on an org chart. The Design Manager handles people, the Lead Designer handles craft. Problem solved, right? Except clean org charts are fantasy. In reality, both roles care deeply about team health, design quality, and shipping great work.

The magic happens when you embrace the overlap instead of fighting it—when you start thinking of your design org as a design organism.

The Anatomy of a Healthy Design Team

Here’s what I’ve learned from years of being on both sides of this equation: think of your design team as a living organism. The Design Manager tends to the mind (the psychological safety, the career growth, the team dynamics). The Lead Designer tends to the body (the craft skills, the design standards, the hands-on work that ships to users).

But just like mind and body aren’t completely separate systems, so, too, do these roles overlap in important ways. You can’t have a healthy person without both working in harmony. The trick is knowing where those overlaps are and how to navigate them gracefully.

When we look at how healthy teams actually function, three critical systems emerge. Each requires both roles to work together, but with one taking primary responsibility for keeping that system strong.

The Nervous System: People & Psychology

Primary caretaker: Design Manager

Supporting role: Lead Designer

The nervous system is all about signals, feedback, and psychological safety. When this system is healthy, information flows freely, people feel safe to take risks, and the team can adapt quickly to new challenges.

The Design Manager is the primary caretaker here. They’re monitoring the team’s psychological pulse, ensuring feedback loops are healthy, and creating the conditions for people to grow. They’re hosting career conversations, managing workload, and making sure no one burns out.

But the Lead Designer plays a crucial supporting role. They’re providing sensory input about craft development needs, spotting when someone’s design skills are stagnating, and helping identify growth opportunities that the Design Manager might miss.

Design Manager tends to:

- Career conversations and growth planning

- Team psychological safety and dynamics

- Workload management and resource allocation

- Performance reviews and feedback systems

- Creating learning opportunities

Lead Designer supports by:

- Providing craft-specific feedback on team member development

- Identifying design skill gaps and growth opportunities

- Offering design mentorship and guidance

- Signaling when team members are ready for more complex challenges

The Muscular System: Craft & Execution

Primary caretaker: Lead Designer

Supporting role: Design Manager

The muscular system is about strength, coordination, and skill development. When this system is healthy, the team can execute complex design work with precision, maintain consistent quality, and adapt their craft to new challenges.

The Lead Designer is the primary caretaker here. They’re setting design standards, providing craft coaching, and ensuring that shipping work meets the quality bar. They’re the ones who can tell you if a design decision is sound or if we’re solving the right problem.

But the Design Manager plays a crucial supporting role. They’re ensuring the team has the resources and support to do their best craft work, like proper nutrition and recovery time for an athlete.

Lead Designer tends to:

- Definition of design standards and system usage

- Feedback on what design work meets the standard

- Experience direction for the product

- Design decisions and product-wide alignment

- Innovation and craft advancement

Design Manager supports by:

- Ensuring design standards are understood and adopted across the team

- Confirming experience direction is being followed

- Supporting practices and systems that scale without bottlenecking

- Facilitating design alignment across teams

- Providing resources and removing obstacles to great craft work

The Circulatory System: Strategy & Flow

Shared caretakers: Both Design Manager and Lead Designer

The circulatory system is about how information, decisions, and energy flow through the team. When this system is healthy, strategic direction is clear, priorities are aligned, and the team can respond quickly to new opportunities or challenges.

This is where true partnership happens. Both roles are responsible for keeping the circulation strong, but they’re bringing different perspectives to the table.

Lead Designer contributes:

- User needs are met by the product

- Overall product quality and experience

- Strategic design initiatives

- Research-based user needs for each initiative

Design Manager contributes:

- Communication to team and stakeholders

- Stakeholder management and alignment

- Cross-functional team accountability

- Strategic business initiatives

Both collaborate on:

- Co-creation of strategy with leadership

- Team goals and prioritization approach

- Organizational structure decisions

- Success measures and frameworks

Keeping the Organism Healthy

The key to making this partnership sing is understanding that all three systems need to work together. A team with great craft skills but poor psychological safety will burn out. A team with great culture but weak craft execution will ship mediocre work. A team with both but poor strategic circulation will work hard on the wrong things.

Be Explicit About Which System You’re Tending

When you’re in a meeting about a design problem, it helps to acknowledge which system you’re primarily focused on. “I’m thinking about this from a team capacity perspective” (nervous system) or “I’m looking at this through the lens of user needs” (muscular system) gives everyone context for your input.

This isn’t about staying in your lane. It’s about being transparent as to which lens you’re using, so the other person knows how to best add their perspective.

Create Healthy Feedback Loops

The most successful partnerships I’ve seen establish clear feedback loops between the systems:

Nervous system signals to muscular system: “The team is struggling with confidence in their design skills” → Lead Designer provides more craft coaching and clearer standards.

Muscular system signals to nervous system: “The team’s craft skills are advancing faster than their project complexity” → Design Manager finds more challenging growth opportunities.

Both systems signal to circulatory system: “We’re seeing patterns in team health and craft development that suggest we need to adjust our strategic priorities.”

Handle Handoffs Gracefully

The most critical moments in this partnership are when something moves from one system to another. This might be when a design standard (muscular system) needs to be rolled out across the team (nervous system), or when a strategic initiative (circulatory system) needs specific craft execution (muscular system).

Make these transitions explicit. “I’ve defined the new component standards. Can you help me think through how to get the team up to speed?” or “We’ve agreed on this strategic direction. I’m going to focus on the specific user experience approach from here.”

Stay Curious, Not Territorial

The Design Manager who never thinks about craft, or the Lead Designer who never considers team dynamics, is like a doctor who only looks at one body system. Great design leadership requires both people to care about the whole organism, even when they’re not the primary caretaker.

This means asking questions rather than making assumptions. “What do you think about the team’s craft development in this area?” or “How do you see this impacting team morale and workload?” keeps both perspectives active in every decision.

When the Organism Gets Sick

Even with clear roles, this partnership can go sideways. Here are the most common failure modes I’ve seen:

System Isolation

The Design Manager focuses only on the nervous system and ignores craft development. The Lead Designer focuses only on the muscular system and ignores team dynamics. Both people retreat to their comfort zones and stop collaborating.

The symptoms: Team members get mixed messages, work quality suffers, morale drops.

The treatment: Reconnect around shared outcomes. What are you both trying to achieve? Usually it’s great design work that ships on time from a healthy team. Figure out how both systems serve that goal.

Poor Circulation

Strategic direction is unclear, priorities keep shifting, and neither role is taking responsibility for keeping information flowing.

The symptoms: Team members are confused about priorities, work gets duplicated or dropped, deadlines are missed.

The treatment: Explicitly assign responsibility for circulation. Who’s communicating what to whom? How often? What’s the feedback loop?

Autoimmune Response

One person feels threatened by the other’s expertise. The Design Manager thinks the Lead Designer is undermining their authority. The Lead Designer thinks the Design Manager doesn’t understand craft.

The symptoms: Defensive behavior, territorial disputes, team members caught in the middle.

The treatment: Remember that you’re both caretakers of the same organism. When one system fails, the whole team suffers. When both systems are healthy, the team thrives.

The Payoff

Yes, this model requires more communication. Yes, it requires both people to be secure enough to share responsibility for team health. But the payoff is worth it: better decisions, stronger teams, and design work that’s both excellent and sustainable.

When both roles are healthy and working well together, you get the best of both worlds: deep craft expertise and strong people leadership. When one person is out sick, on vacation, or overwhelmed, the other can help maintain the team’s health. When a decision requires both the people perspective and the craft perspective, you’ve got both right there in the room.

Most importantly, the framework scales. As your team grows, you can apply the same system thinking to new challenges. Need to launch a design system? Lead Designer tends to the muscular system (standards and implementation), Design Manager tends to the nervous system (team adoption and change management), and both tend to circulation (communication and stakeholder alignment).

The Bottom Line

The relationship between a Design Manager and Lead Designer isn’t about dividing territories. It’s about multiplying impact. When both roles understand they’re tending to different aspects of the same healthy organism, magic happens.

The mind and body work together. The team gets both the strategic thinking and the craft excellence they need. And most importantly, the work that ships to users benefits from both perspectives.

So the next time you’re in that meeting room, wondering why two people are talking about the same problem from different angles, remember: you’re watching shared leadership in action. And if it’s working well, both the mind and body of your design team are getting stronger.

The Marvel Cinematic Universe Plot Threads That Fizzled Out

Part of the novelty of the Marvel Cinematic Universe has been the franchise’s ability to throw out new developments and hooks, only to pick up on them again in a sequel, spinoff, or some other project. Whether it’s something as grand as name-dropping the Avengers as a concept or something more subtle, like questioning the […]

The post The Marvel Cinematic Universe Plot Threads That Fizzled Out appeared first on Den of Geek.

Superman may be still playing in theaters and may have just hit HBO Max, but director and DC Studios co-CEO James Gunn‘s already looking to the future. Not only has he announced a follow-up titled Man of Tomorrow, but he also took to Instagram to share a picture of a draft of the completed script.

On first glance, there’s nothing remarkable about the image. It has the title Man of Tomorrow, an appellation for Superman that can also apply to Lex Luthor, whom, Gunn has stated, will be a co-protagonist of the film. There’s the author credits, one for Gunn and a “Superman created by” credit for Jerry Siegel and Joe Shuster, reflecting the director’s commitment to the character’s comic book roots. But what does jump out is the picture on the cover, that of an old-timey medical drawing showing the human brain.

For long-time Superman fans, the picture teases the arrival of a villain that movie fans have long wanted to see: Brainiac.

Brainiac is one of the oldest baddies in Superman’s rogues gallery. He made his first appearance in 1958’s Action Comics #242, written by Otto Binder and penciled by Al Plastino. Although more of a traditional villain in that story, complete with a monkey henchman and a tendency to call Superman “Punyman,” Brainiac already demonstrated scientific genius and a predilection for conducting experiments on sentient beings.

cnx({

playerId: “106e33c0-3911-473c-b599-b1426db57530”,

}).render(“0270c398a82f44f49c23c16122516796”);

});

Over the decades, Brainiac would evolve, becoming more of a living computer that sometimes took over human hosts. Those hosts included carnival magician Milton Fine and, in Alan Moore‘s Whatever Happened to the Man of Tomorrow?, he bonded with Lex Luthor himself.

It’s that last example that leaps to mind when one reads the title for Gunn’s upcoming movie. In Whatever Happened to the Man of Tomorrow?, Moore pens the final story of the Silver Age Superman, right before the DC Comics company-wide reboot Crisis on Infinite Earths. Although the overall villain is the imp Mister Mxyzptlk, who tires of toying with Superman and decides to become sadistic, Luthor and Brainiac bond after the latter finds the former crashed in the antarctic.

If Gunn does indeed bring Brainiac to the big screen, he’ll reverse a trend that has been running through Superman movies since the Christopher Reeve era. While Lex Luthor has been Superman’s arch-enemy since the 1940s, Brainiac has always been a top-tier threat and one that filmmakers have long wanted to feature in a movie.

Before he was pushed out by producers, Richard Donner intended Brainiac to be the antagonist of a third movie. When Donner was replaced by Richard Lester and Richard Pryor was added to the cast, the villain became a Luthor knock-off with a computer that goes haywire. In 2010, information leaked about Bryan Singer’s intended sequel for Superman Returns, which was to involve an alien invader revealed late in the film to be Brainiac, the being responsible for Krypton’s destruction. And had he not been forced to leap into world-building with Batman v Superman: Dawn of Justice, Zack Snyder considered Brainiac as part of a Man of Steel sequel.

As these examples demonstrate, Brainiac looms large in filmmakers’ imagination, and with good reason. Alien invaders always make for cool visuals, especially when their M.O. includes shrinking cities and putting them in bottles. Brainiac can also be a more physical foe for Superman, as his constant need to upgrade allows him to create bigger and more imposing bodies.

For whatever reason, no one has been able to make Brainiac work in a movie. But if there’s anyone who can do it, it’s James Gunn, the guy who has somehow turned Peacemaker into a beloved and emotionally rich character.

Man of Tomorrow is slated for a 2027 release.

The post Superman 2 Might Finally Bring A Long-Awaited DC Villain to the Big Screen appeared first on Den of Geek.

Superman 2 Might Finally Bring A Long-Awaited DC Villain to the Big Screen

Superman may be still playing in theaters and may have just hit HBO Max, but director and DC Studios co-CEO James Gunn‘s already looking to the future. Not only has he announced a follow-up titled Man of Tomorrow, but he also took to Instagram to share a picture of a draft of the completed script. […]

The post Superman 2 Might Finally Bring A Long-Awaited DC Villain to the Big Screen appeared first on Den of Geek.

Superman may be still playing in theaters and may have just hit HBO Max, but director and DC Studios co-CEO James Gunn‘s already looking to the future. Not only has he announced a follow-up titled Man of Tomorrow, but he also took to Instagram to share a picture of a draft of the completed script.

On first glance, there’s nothing remarkable about the image. It has the title Man of Tomorrow, an appellation for Superman that can also apply to Lex Luthor, whom, Gunn has stated, will be a co-protagonist of the film. There’s the author credits, one for Gunn and a “Superman created by” credit for Jerry Siegel and Joe Shuster, reflecting the director’s commitment to the character’s comic book roots. But what does jump out is the picture on the cover, that of an old-timey medical drawing showing the human brain.

For long-time Superman fans, the picture teases the arrival of a villain that movie fans have long wanted to see: Brainiac.

Brainiac is one of the oldest baddies in Superman’s rogues gallery. He made his first appearance in 1958’s Action Comics #242, written by Otto Binder and penciled by Al Plastino. Although more of a traditional villain in that story, complete with a monkey henchman and a tendency to call Superman “Punyman,” Brainiac already demonstrated scientific genius and a predilection for conducting experiments on sentient beings.

cnx({

playerId: “106e33c0-3911-473c-b599-b1426db57530”,

}).render(“0270c398a82f44f49c23c16122516796”);

});

Over the decades, Brainiac would evolve, becoming more of a living computer that sometimes took over human hosts. Those hosts included carnival magician Milton Fine and, in Alan Moore‘s Whatever Happened to the Man of Tomorrow?, he bonded with Lex Luthor himself.

It’s that last example that leaps to mind when one reads the title for Gunn’s upcoming movie. In Whatever Happened to the Man of Tomorrow?, Moore pens the final story of the Silver Age Superman, right before the DC Comics company-wide reboot Crisis on Infinite Earths. Although the overall villain is the imp Mister Mxyzptlk, who tires of toying with Superman and decides to become sadistic, Luthor and Brainiac bond after the latter finds the former crashed in the antarctic.

If Gunn does indeed bring Brainiac to the big screen, he’ll reverse a trend that has been running through Superman movies since the Christopher Reeve era. While Lex Luthor has been Superman’s arch-enemy since the 1940s, Brainiac has always been a top-tier threat and one that filmmakers have long wanted to feature in a movie.

Before he was pushed out by producers, Richard Donner intended Brainiac to be the antagonist of a third movie. When Donner was replaced by Richard Lester and Richard Pryor was added to the cast, the villain became a Luthor knock-off with a computer that goes haywire. In 2010, information leaked about Bryan Singer’s intended sequel for Superman Returns, which was to involve an alien invader revealed late in the film to be Brainiac, the being responsible for Krypton’s destruction. And had he not been forced to leap into world-building with Batman v Superman: Dawn of Justice, Zack Snyder considered Brainiac as part of a Man of Steel sequel.

As these examples demonstrate, Brainiac looms large in filmmakers’ imagination, and with good reason. Alien invaders always make for cool visuals, especially when their M.O. includes shrinking cities and putting them in bottles. Brainiac can also be a more physical foe for Superman, as his constant need to upgrade allows him to create bigger and more imposing bodies.

For whatever reason, no one has been able to make Brainiac work in a movie. But if there’s anyone who can do it, it’s James Gunn, the guy who has somehow turned Peacemaker into a beloved and emotionally rich character.

Man of Tomorrow is slated for a 2027 release.

The post Superman 2 Might Finally Bring A Long-Awaited DC Villain to the Big Screen appeared first on Den of Geek.

Bugonia Review: Emma Stone Transforms in Disturbing Sci-Fi Comedy

About 40 years ago, a New York senator and lifelong politician named Daniel Patrick Moynihan said, “Everyone is entitled to his own opinion, but not his own facts.” It was as catchy then as now, with the phrase lingering still in the zeitgeist like a faded bumper stick tagline. Yet if the ongoing collapse of […]

The post Bugonia Review: Emma Stone Transforms in Disturbing Sci-Fi Comedy appeared first on Den of Geek.

Superman may be still playing in theaters and may have just hit HBO Max, but director and DC Studios co-CEO James Gunn‘s already looking to the future. Not only has he announced a follow-up titled Man of Tomorrow, but he also took to Instagram to share a picture of a draft of the completed script.

On first glance, there’s nothing remarkable about the image. It has the title Man of Tomorrow, an appellation for Superman that can also apply to Lex Luthor, whom, Gunn has stated, will be a co-protagonist of the film. There’s the author credits, one for Gunn and a “Superman created by” credit for Jerry Siegel and Joe Shuster, reflecting the director’s commitment to the character’s comic book roots. But what does jump out is the picture on the cover, that of an old-timey medical drawing showing the human brain.

For long-time Superman fans, the picture teases the arrival of a villain that movie fans have long wanted to see: Brainiac.

Brainiac is one of the oldest baddies in Superman’s rogues gallery. He made his first appearance in 1958’s Action Comics #242, written by Otto Binder and penciled by Al Plastino. Although more of a traditional villain in that story, complete with a monkey henchman and a tendency to call Superman “Punyman,” Brainiac already demonstrated scientific genius and a predilection for conducting experiments on sentient beings.

cnx({

playerId: “106e33c0-3911-473c-b599-b1426db57530”,

}).render(“0270c398a82f44f49c23c16122516796”);

});

Over the decades, Brainiac would evolve, becoming more of a living computer that sometimes took over human hosts. Those hosts included carnival magician Milton Fine and, in Alan Moore‘s Whatever Happened to the Man of Tomorrow?, he bonded with Lex Luthor himself.

It’s that last example that leaps to mind when one reads the title for Gunn’s upcoming movie. In Whatever Happened to the Man of Tomorrow?, Moore pens the final story of the Silver Age Superman, right before the DC Comics company-wide reboot Crisis on Infinite Earths. Although the overall villain is the imp Mister Mxyzptlk, who tires of toying with Superman and decides to become sadistic, Luthor and Brainiac bond after the latter finds the former crashed in the antarctic.

If Gunn does indeed bring Brainiac to the big screen, he’ll reverse a trend that has been running through Superman movies since the Christopher Reeve era. While Lex Luthor has been Superman’s arch-enemy since the 1940s, Brainiac has always been a top-tier threat and one that filmmakers have long wanted to feature in a movie.

Before he was pushed out by producers, Richard Donner intended Brainiac to be the antagonist of a third movie. When Donner was replaced by Richard Lester and Richard Pryor was added to the cast, the villain became a Luthor knock-off with a computer that goes haywire. In 2010, information leaked about Bryan Singer’s intended sequel for Superman Returns, which was to involve an alien invader revealed late in the film to be Brainiac, the being responsible for Krypton’s destruction. And had he not been forced to leap into world-building with Batman v Superman: Dawn of Justice, Zack Snyder considered Brainiac as part of a Man of Steel sequel.

As these examples demonstrate, Brainiac looms large in filmmakers’ imagination, and with good reason. Alien invaders always make for cool visuals, especially when their M.O. includes shrinking cities and putting them in bottles. Brainiac can also be a more physical foe for Superman, as his constant need to upgrade allows him to create bigger and more imposing bodies.

For whatever reason, no one has been able to make Brainiac work in a movie. But if there’s anyone who can do it, it’s James Gunn, the guy who has somehow turned Peacemaker into a beloved and emotionally rich character.

Man of Tomorrow is slated for a 2027 release.

The post Superman 2 Might Finally Bring A Long-Awaited DC Villain to the Big Screen appeared first on Den of Geek.

Asynchronous Design Critique: Giving Feedback

Feedback, in whichever form it takes, and whatever it may be called, is one of the most effective soft skills that we have at our disposal to collaboratively get our designs to a better place while growing our own skills and perspectives.

Feedback is also one of the most underestimated tools, and often by assuming that we’re already good at it, we settle, forgetting that it’s a skill that can be trained, grown, and improved. Poor feedback can create confusion in projects, bring down morale, and affect trust and team collaboration over the long term. Quality feedback can be a transformative force.

Practicing our skills is surely a good way to improve, but the learning gets even faster when it’s paired with a good foundation that channels and focuses the practice. What are some foundational aspects of giving good feedback? And how can feedback be adjusted for remote and distributed work environments?

On the web, we can identify a long tradition of asynchronous feedback: from the early days of open source, code was shared and discussed on mailing lists. Today, developers engage on pull requests, designers comment in their favorite design tools, project managers and scrum masters exchange ideas on tickets, and so on.

Design critique is often the name used for a type of feedback that’s provided to make our work better, collaboratively. So it shares a lot of the principles with feedback in general, but it also has some differences.

The content

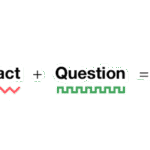

The foundation of every good critique is the feedback’s content, so that’s where we need to start. There are many models that you can use to shape your content. The one that I personally like best—because it’s clear and actionable—is this one from Lara Hogan.

While this equation is generally used to give feedback to people, it also fits really well in a design critique because it ultimately answers some of the core questions that we work on: What? Where? Why? How? Imagine that you’re giving some feedback about some design work that spans multiple screens, like an onboarding flow: there are some pages shown, a flow blueprint, and an outline of the decisions made. You spot something that could be improved. If you keep the three elements of the equation in mind, you’ll have a mental model that can help you be more precise and effective.

Here is a comment that could be given as a part of some feedback, and it might look reasonable at a first glance: it seems to superficially fulfill the elements in the equation. But does it?

Not sure about the buttons’ styles and hierarchy—it feels off. Can you change them?

Observation for design feedback doesn’t just mean pointing out which part of the interface your feedback refers to, but it also refers to offering a perspective that’s as specific as possible. Are you providing the user’s perspective? Your expert perspective? A business perspective? The project manager’s perspective? A first-time user’s perspective?

When I see these two buttons, I expect one to go forward and one to go back.

Impact is about the why. Just pointing out a UI element might sometimes be enough if the issue may be obvious, but more often than not, you should add an explanation of what you’re pointing out.

When I see these two buttons, I expect one to go forward and one to go back. But this is the only screen where this happens, as before we just used a single button and an “×” to close. This seems to be breaking the consistency in the flow.

The question approach is meant to provide open guidance by eliciting the critical thinking in the designer receiving the feedback. Notably, in Lara’s equation she provides a second approach: request, which instead provides guidance toward a specific solution. While that’s a viable option for feedback in general, for design critiques, in my experience, defaulting to the question approach usually reaches the best solutions because designers are generally more comfortable in being given an open space to explore.

The difference between the two can be exemplified with, for the question approach:

When I see these two buttons, I expect one to go forward and one to go back. But this is the only screen where this happens, as before we just used a single button and an “×” to close. This seems to be breaking the consistency in the flow. Would it make sense to unify them?

Or, for the request approach:

When I see these two buttons, I expect one to go forward and one to go back. But this is the only screen where this happens, as before we just used a single button and an “×” to close. This seems to be breaking the consistency in the flow. Let’s make sure that all screens have the same pair of forward and back buttons.

At this point in some situations, it might be useful to integrate with an extra why: why you consider the given suggestion to be better.

When I see these two buttons, I expect one to go forward and one to go back. But this is the only screen where this happens, as before we just used a single button and an “×” to close. This seems to be breaking the consistency in the flow. Let’s make sure that all screens have the same two forward and back buttons so that users don’t get confused.

Choosing the question approach or the request approach can also at times be a matter of personal preference. A while ago, I was putting a lot of effort into improving my feedback: I did rounds of anonymous feedback, and I reviewed feedback with other people. After a few rounds of this work and a year later, I got a positive response: my feedback came across as effective and grounded. Until I changed teams. To my shock, my next round of feedback from one specific person wasn’t that great. The reason is that I had previously tried not to be prescriptive in my advice—because the people who I was previously working with preferred the open-ended question format over the request style of suggestions. But now in this other team, there was one person who instead preferred specific guidance. So I adapted my feedback for them to include requests.

One comment that I heard come up a few times is that this kind of feedback is quite long, and it doesn’t seem very efficient. No… but also yes. Let’s explore both sides.

No, this style of feedback is actually efficient because the length here is a byproduct of clarity, and spending time giving this kind of feedback can provide exactly enough information for a good fix. Also if we zoom out, it can reduce future back-and-forth conversations and misunderstandings, improving the overall efficiency and effectiveness of collaboration beyond the single comment. Imagine that in the example above the feedback were instead just, “Let’s make sure that all screens have the same two forward and back buttons.” The designer receiving this feedback wouldn’t have much to go by, so they might just apply the change. In later iterations, the interface might change or they might introduce new features—and maybe that change might not make sense anymore. Without the why, the designer might imagine that the change is about consistency… but what if it wasn’t? So there could now be an underlying concern that changing the buttons would be perceived as a regression.

Yes, this style of feedback is not always efficient because the points in some comments don’t always need to be exhaustive, sometimes because certain changes may be obvious (“The font used doesn’t follow our guidelines”) and sometimes because the team may have a lot of internal knowledge such that some of the whys may be implied.

So the equation above isn’t meant to suggest a strict template for feedback but a mnemonic to reflect and improve the practice. Even after years of active work on my critiques, I still from time to time go back to this formula and reflect on whether what I just wrote is effective.

The tone

Well-grounded content is the foundation of feedback, but that’s not really enough. The soft skills of the person who’s providing the critique can multiply the likelihood that the feedback will be well received and understood. Tone alone can make the difference between content that’s rejected or welcomed, and it’s been demonstrated that only positive feedback creates sustained change in people.

Since our goal is to be understood and to have a positive working environment, tone is essential to work on. Over the years, I’ve tried to summarize the required soft skills in a formula that mirrors the one for content: the receptivity equation.

Respectful feedback comes across as grounded, solid, and constructive. It’s the kind of feedback that, whether it’s positive or negative, is perceived as useful and fair.

Timing refers to when the feedback happens. To-the-point feedback doesn’t have much hope of being well received if it’s given at the wrong time. Questioning the entire high-level information architecture of a new feature when it’s about to ship might still be relevant if that questioning highlights a major blocker that nobody saw, but it’s way more likely that those concerns will have to wait for a later rework. So in general, attune your feedback to the stage of the project. Early iteration? Late iteration? Polishing work in progress? These all have different needs. The right timing will make it more likely that your feedback will be well received.

Attitude is the equivalent of intent, and in the context of person-to-person feedback, it can be referred to as radical candor. That means checking before we write to see whether what we have in mind will truly help the person and make the project better overall. This might be a hard reflection at times because maybe we don’t want to admit that we don’t really appreciate that person. Hopefully that’s not the case, but that can happen, and that’s okay. Acknowledging and owning that can help you make up for that: how would I write if I really cared about them? How can I avoid being passive aggressive? How can I be more constructive?

Form is relevant especially in a diverse and cross-cultural work environments because having great content, perfect timing, and the right attitude might not come across if the way that we write creates misunderstandings. There might be many reasons for this: sometimes certain words might trigger specific reactions; sometimes nonnative speakers might not understand all the nuances of some sentences; sometimes our brains might just be different and we might perceive the world differently—neurodiversity must be taken into consideration. Whatever the reason, it’s important to review not just what we write but how.

A few years back, I was asking for some feedback on how I give feedback. I received some good advice but also a comment that surprised me. They pointed out that when I wrote “Oh, […],” I made them feel stupid. That wasn’t my intent! I felt really bad, and I just realized that I provided feedback to them for months, and every time I might have made them feel stupid. I was horrified… but also thankful. I made a quick fix: I added “oh” in my list of replaced words (your choice between: macOS’s text replacement, aText, TextExpander, or others) so that when I typed “oh,” it was instantly deleted.

Something to highlight because it’s quite frequent—especially in teams that have a strong group spirit—is that people tend to beat around the bush. It’s important to remember here that a positive attitude doesn’t mean going light on the feedback—it just means that even when you provide hard, difficult, or challenging feedback, you do so in a way that’s respectful and constructive. The nicest thing that you can do for someone is to help them grow.

We have a great advantage in giving feedback in written form: it can be reviewed by another person who isn’t directly involved, which can help to reduce or remove any bias that might be there. I found that the best, most insightful moments for me have happened when I’ve shared a comment and I’ve asked someone who I highly trusted, “How does this sound?,” “How can I do it better,” and even “How would you have written it?”—and I’ve learned a lot by seeing the two versions side by side.

The format

Asynchronous feedback also has a major inherent advantage: we can take more time to refine what we’ve written to make sure that it fulfills two main goals: the clarity of communication and the actionability of the suggestions.

Let’s imagine that someone shared a design iteration for a project. You are reviewing it and leaving a comment. There are many ways to do this, and of course context matters, but let’s try to think about some elements that may be useful to consider.

In terms of clarity, start by grounding the critique that you’re about to give by providing context. Specifically, this means describing where you’re coming from: do you have a deep knowledge of the project, or is this the first time that you’re seeing it? Are you coming from a high-level perspective, or are you figuring out the details? Are there regressions? Which user’s perspective are you taking when providing your feedback? Is the design iteration at a point where it would be okay to ship this, or are there major things that need to be addressed first?

Providing context is helpful even if you’re sharing feedback within a team that already has some information on the project. And context is absolutely essential when giving cross-team feedback. If I were to review a design that might be indirectly related to my work, and if I had no knowledge about how the project arrived at that point, I would say so, highlighting my take as external.

We often focus on the negatives, trying to outline all the things that could be done better. That’s of course important, but it’s just as important—if not more—to focus on the positives, especially if you saw progress from the previous iteration. This might seem superfluous, but it’s important to keep in mind that design is a discipline where there are hundreds of possible solutions for every problem. So pointing out that the design solution that was chosen is good and explaining why it’s good has two major benefits: it confirms that the approach taken was solid, and it helps to ground your negative feedback. In the longer term, sharing positive feedback can help prevent regressions on things that are going well because those things will have been highlighted as important. As a bonus, positive feedback can also help reduce impostor syndrome.

There’s one powerful approach that combines both context and a focus on the positives: frame how the design is better than the status quo (compared to a previous iteration, competitors, or benchmarks) and why, and then on that foundation, you can add what could be improved. This is powerful because there’s a big difference between a critique that’s for a design that’s already in good shape and a critique that’s for a design that isn’t quite there yet.

Another way that you can improve your feedback is to depersonalize the feedback: the comments should always be about the work, never about the person who made it. It’s “This button isn’t well aligned” versus “You haven’t aligned this button well.” This is very easy to change in your writing by reviewing it just before sending.

In terms of actionability, one of the best approaches to help the designer who’s reading through your feedback is to split it into bullet points or paragraphs, which are easier to review and analyze one by one. For longer pieces of feedback, you might also consider splitting it into sections or even across multiple comments. Of course, adding screenshots or signifying markers of the specific part of the interface you’re referring to can also be especially useful.

One approach that I’ve personally used effectively in some contexts is to enhance the bullet points with four markers using emojis. So a red square 🟥 means that it’s something that I consider blocking; a yellow diamond 🔶 is something that I can be convinced otherwise, but it seems to me that it should be changed; and a green circle 🟢 is a detailed, positive confirmation. I also use a blue spiral 🌀 for either something that I’m not sure about, an exploration, an open alternative, or just a note. But I’d use this approach only on teams where I’ve already established a good level of trust because if it happens that I have to deliver a lot of red squares, the impact could be quite demoralizing, and I’d reframe how I’d communicate that a bit.

Let’s see how this would work by reusing the example that we used earlier as the first bullet point in this list: